Pablo Romeo

Co-Founder & CTO

The only constant is change, in life and in the world of Generative AI. New models and AI-powered tools appear almost daily, and many of them disappear just as quickly. Not to be dramatic, but it’s true: advances in AI can make tools and solutions obsolete within months. And the pace is accelerating.

For decision-makers, this relentless evolution is both exciting and intimidating, and most people share that feeling. If you’re responsible for implementing AI in your organization, it’s only natural to proceed with caution. The most common concern I hear from clients is: “I’m afraid to invest in an AI solution today that’s already obsolete by tomorrow.”

Whether that fear is justified depends on how you build. The key to a wise investment is designing solutions that don’t merely survive the rising tide of innovation but float with it and use it to set sail. There are proven strategies for architecting AI solutions that evolve alongside the technology, keeping your investment future-ready.

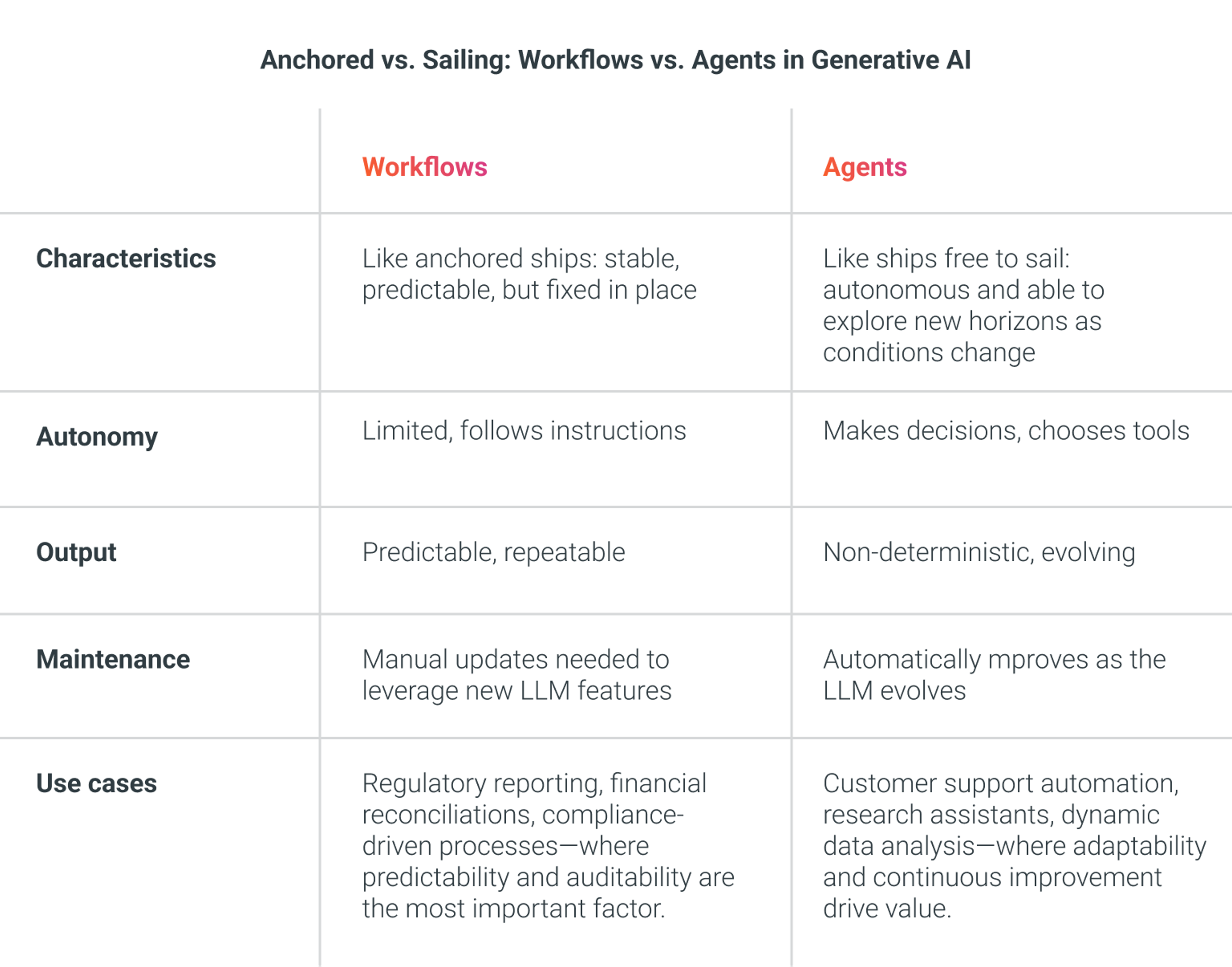

Imagine your AI application as a ship in a harbor. An anchored ship, while afloat, is fixed in place. No matter how favorable the winds become, it can’t set sail to explore new horizons. In contrast, a ship that is free to set sail can move with the tide and harness the wind, navigating to new destinations as the environment evolves.

There are two primary approaches to building generative AI products: workflows and agents. Workflows are like anchored ships; agents are free to sail.

Workflows rely heavily on traditional programming techniques: the developer writes code that tightly controls every step. The LLM (Large Language Model) is typically given specific instructions and limited autonomy. This control gives developers high confidence in the program’s outputs, but it also means the solution won’t fully benefit from improvements in the underlying LLM as they occur.

The primary advantages of workflows are determinism (outcomes are predictable and repeatable), a reduced risk of “hallucinations” and other errors, and simpler compliance and auditing. Yet this rigidity comes at a cost. Workflow-based applications are static: they can’t take advantage of new model capabilities as they emerge, and they often require significant manual updates to remain relevant as the technology advances.
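To make the workflow style concrete, here is a minimal Python sketch. The invoice-extraction task, the `extract_invoice_fields` function and the `LLM` callable are hypothetical illustrations, not CloudX code, and a fake model stands in for a real API so the example runs offline. The developer fixes every step in code; the model only fills one narrow slot.

```python
from typing import Callable, Optional

# Hypothetical stand-in for a model call: takes a prompt, returns text.
LLM = Callable[[str], str]


def extract_invoice_fields(text: str, llm: LLM) -> dict[str, Optional[str]]:
    """A fixed, developer-defined pipeline: the model only fills one narrow slot."""
    # Step 1: deterministic pre-processing written by the developer.
    cleaned = " ".join(text.split())
    # Step 2: a single, tightly scoped model call with explicit instructions.
    vendor = llm(f"Return only the vendor name from this invoice:\n{cleaned}").strip()
    # Step 3: deterministic post-processing; the order of steps never changes.
    total = next((tok for tok in cleaned.split() if tok.startswith("$")), None)
    return {"vendor": vendor, "total": total}


# Usage with a fake model, so the example runs without any API key.
fake_llm: LLM = lambda prompt: "  Acme Corp\n"
result = extract_invoice_fields("Invoice from  Acme Corp\nTotal: $120.50", fake_llm)
print(result)  # {'vendor': 'Acme Corp', 'total': '$120.50'}
```

A better model can only improve the one vendor-name step here; it cannot change how the task is decomposed, which is the rigidity described above.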

Agentic solutions delegate more autonomy to the LLM. Here, the AI acts as an agent: it makes decisions, chooses tools, and determines the best path to solve a problem. The developer still has an important role: providing the agent with a wide range of tools, which the agent can use autonomously as needed.
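By contrast, an agent is commonly built as a loop in which the model picks the next tool to call based on what it has observed so far. The sketch below assumes a hypothetical `planner` function standing in for an LLM and a hypothetical `count_rows` tool; it illustrates the general pattern, not how any particular product is built. The developer's job shifts to supplying tools and guardrails, such as a step limit.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Action:
    tool: Optional[str]  # tool to call next, or None when the agent is finished
    argument: str        # input for the tool, or the final answer when tool is None


# Hypothetical planner signature: in a real system this would be an LLM prompted
# with the goal, the available tool names and the observations gathered so far.
Planner = Callable[[str, list[str], list[str]], Action]
Tool = Callable[[str], str]


def run_agent(goal: str, tools: dict[str, Tool], planner: Planner, max_steps: int = 5) -> str:
    """Let the planner choose tools in a loop until it returns a final answer."""
    history: list[str] = []
    for _ in range(max_steps):
        action = planner(goal, sorted(tools), history)
        if action.tool is None:
            return action.argument  # the model decided it can answer now
        if action.tool not in tools:
            # Feed the mistake back instead of crashing, so the model can recover.
            history.append(f"error: unknown tool {action.tool!r}")
            continue
        observation = tools[action.tool](action.argument)
        history.append(f"{action.tool}({action.argument!r}) -> {observation}")
    return "stopped: step limit reached"  # guardrail against endless loops


# Usage with a scripted planner standing in for the model.
def scripted_planner(goal: str, tool_names: list[str], history: list[str]) -> Action:
    if not history:
        return Action("count_rows", "orders")  # first, gather data
    return Action(None, f"Answer based on {history[-1]}")


tools: dict[str, Tool] = {"count_rows": lambda table: "42" if table == "orders" else "0"}
print(run_agent("How many orders are there?", tools, scripted_planner))
```

Swapping the scripted planner for a more capable model changes which tools get called, and in what order, without touching `run_agent` itself.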

This design lets agents adapt as the underlying LLM evolves: as the model’s decision-making improves, so do your application’s capabilities. It also encourages innovation, because the agent can discover new, more efficient ways to deliver value. As LLMs improve, these solutions become more powerful, often without changing a single line of code.

However, agentic solutions are inherently non-deterministic, reflecting the probabilistic nature of AI. This introduces the potential for errors or unexpected behaviors, requiring error-handling strategies different from those used in traditional programming. Fortunately, there are proven techniques to minimize erroneous outputs in agentic systems, which I will discuss later.
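One widely used strategy of this kind, offered here as an illustration rather than as one of the specific techniques this article refers to, is to check the model’s output with ordinary deterministic code and retry with feedback when it fails. In the sketch below, `ask_for_json` and the `LLM` callable are hypothetical names, and scripted replies stand in for a real model.

```python
import json
from typing import Callable

LLM = Callable[[str], str]  # hypothetical model call: prompt in, text out


def ask_for_json(prompt: str, llm: LLM, required_keys: set[str], max_attempts: int = 3) -> dict:
    """Call the model, validate its reply in code, and retry with feedback on failure."""
    feedback = ""
    for _ in range(max_attempts):
        raw = llm(prompt + feedback)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            feedback = f"\nYour last reply was not valid JSON ({exc.msg}). Reply with JSON only."
            continue
        if not isinstance(data, dict):
            feedback = "\nReply with a single JSON object."
            continue
        missing = required_keys - set(data)
        if missing:
            feedback = f"\nYour last reply was missing: {', '.join(sorted(missing))}."
            continue
        return data  # passed every deterministic check
    raise ValueError(f"no valid reply after {max_attempts} attempts")


# Usage with scripted replies that fail twice before succeeding.
replies = iter(["Sure! Here it is", '{"vendor": "Acme"}', '{"vendor": "Acme", "total": 120.5}'])
print(ask_for_json("Extract vendor and total as JSON.", lambda p: next(replies), {"vendor", "total"}))
```

The model stays free to phrase its answer however it likes; only the acceptance criteria are fixed in code, and a hard failure surfaces as an exception the rest of the application can handle.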

There is also a cultural shift required. Developing agentic solutions challenges developers accustomed to tight control. Software engineers are trained to break down complex problems into manageable parts and tackle each systematically. Agentic design requires delegating some of that control to the LLM. But consider this: would you prefer to micro-manage every employee, or empower them with the tools and trust to innovate? The same logic applies to AI. Agentic solutions are about equipping an “artificial brain” with the freedom and resources to excel as its capabilities advance.

Not every use case is suited to agentic design. A compliance-heavy process, such as a government form submission, benefits from a strict step-by-step workflow. There, consistency and accuracy matter most, making a structured workflow not just preferable but mandatory.

On the other hand, agentic solutions are ideal for many scenarios. Coding agents, for example, are among the most widely adopted today because they are designed as autonomous problem solvers that can dynamically plan and execute actions across an unfamiliar codebase. Another example is our own Talk to Database, where the LLM interprets user intent, decides which queries to run or which API requests to make, and chooses how to present the output: as a table, a chart, plain text, or another format.

Agentic solutions can’t be fully deterministic, because the nature of AI is inherently stochastic. However, there are proven strategies to minimize errors, keep AI away from tasks it doesn’t perform well, and leverage its full potential where it excels:

These are just a few of the techniques we employ at CloudX to ensure agentic solutions are both innovative and reliable. We’ll explore these methods in depth in a future article.

If you’re on the fence about investing in AI, I hope this article has helped clarify your doubts and alleviate your concerns. Caution is wise, but with the right strategy, your investment can be future-proofed. The Generative AI tide will keep rising; the question is: will your applications rise with it, or just sink?
