Pablo Romeo

Co-Founder & CTO

Welcome to the second article in our series on evolving Generative AI solutions, from early-stage to enterprise-grade. In our previous article, we explored the foundational steps required to move beyond Proof of Concept and establish a robust enterprise baseline.

Today, we turn our attention to the next set of challenges: scaling AI systems, managing enterprise data at scale, and building resilient infrastructure. We’ll also examine best practices for testing Generative AI solutions and ensuring Responsible AI principles are embedded throughout the deployment lifecycle. In our final article, we’ll address performance optimization and cost management, equipping you with a comprehensive roadmap for enterprise AI success.

Building enterprise AI is much like the evolution of self-driving cars. Decades ago, autonomous vehicles were tested in highly controlled environments, such as empty tracks and predictable routes. Yet, real-world deployment demands that these vehicles navigate chaotic city streets, unpredictable situations, and unfamiliar territories, where the stakes are much higher and mistakes can have severe consequences.

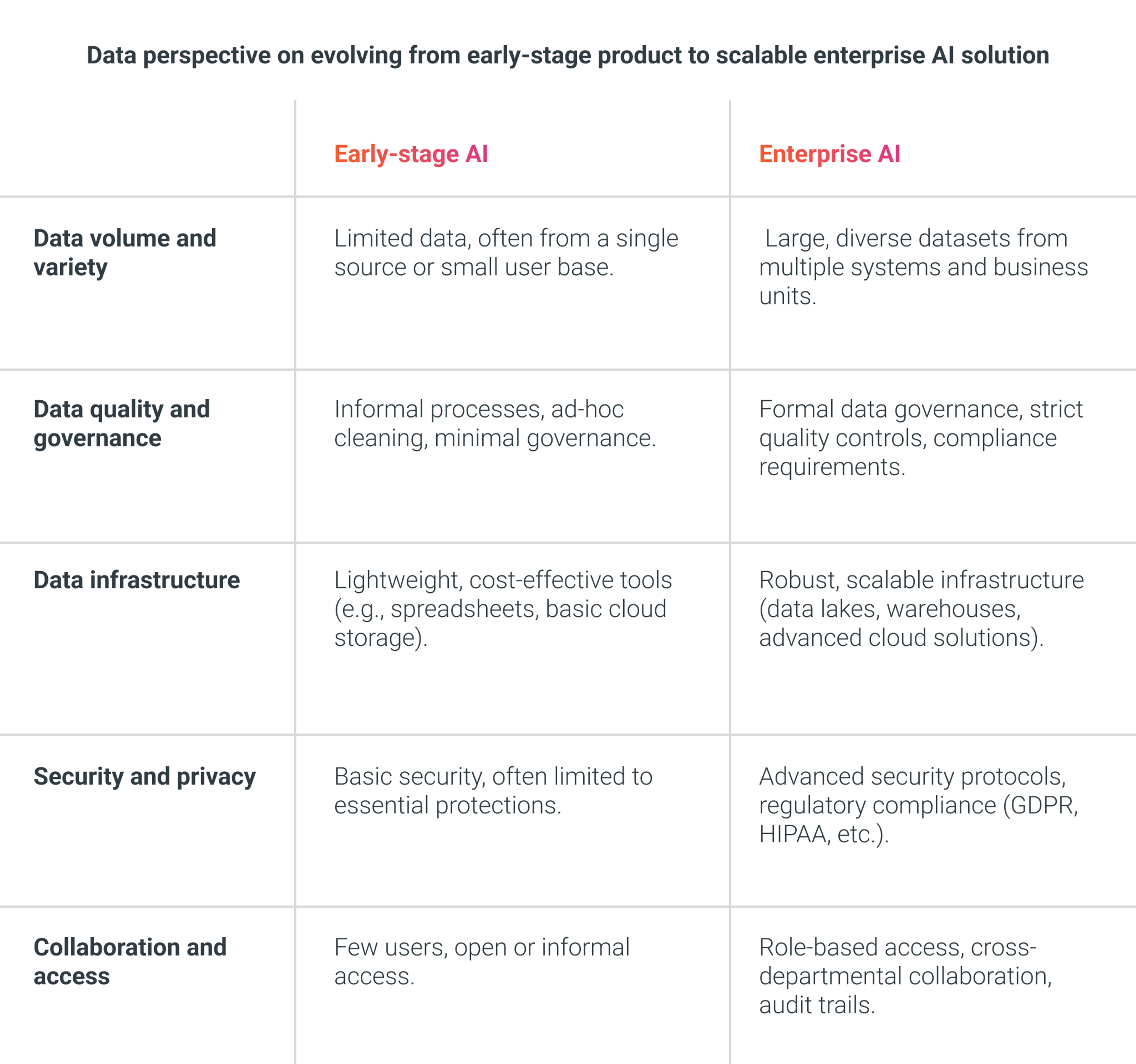

Similarly, Proofs of Concept (PoCs) and Minimum Viable Products (MVPs) for AI typically rely on curated datasets and controlled conditions to validate ideas quickly and efficiently. However, transitioning to enterprise-scale production requires AI systems to perform reliably in the complexity of real-world environments, well beyond the comfort of controlled testing. In production, data is often messy, incomplete, and inconsistent. Ensuring data quality and diversity at scale becomes a foundational requirement. Generative AI models depend on vast and varied datasets to deliver coherent, reliable outputs across a wide range of scenarios. For enterprise AI solutions, it’s critical to move beyond the neatly prepared samples of early development and expose models to the complexity of real-world data.

Another key distinction between early-stage and enterprise deployments is the scale and velocity of data. Enterprise AI systems must efficiently process large volumes of often unstructured data, requiring robust pipelines and scalable storage solutions. What works for a few thousand records in a prototype must be engineered to handle millions of records or real-time data streams in production. As data volumes and transaction rates increase, new challenges emerge that rarely surface in controlled PoC environments.

Best practices include designing efficient data ingestion pipelines, selecting the right data repositories or streaming systems to manage continuous updates, and architecting for horizontal scalability so the system can seamlessly accommodate growing demand. Integrating data from multiple sources into consistent formats is essential yet often overlooked in early-stage development. In production, data handling must evolve into a comprehensive, scalable strategy that addresses ongoing data cleaning, updates, and growth.
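As a rough illustration of integrating multiple sources into a consistent format, the sketch below maps two differently shaped feeds into one schema and keeps only the newest version of each record. The source names (`crm`, `wiki`), their field names, and the `Document` schema are all hypothetical; a real pipeline would add validation, batching, and persistent storage.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Dict, Iterable, Optional


@dataclass(frozen=True)
class Document:
    """Common schema that every source is mapped into (hypothetical)."""
    doc_id: str
    text: str
    updated_at: datetime
    source: str


def from_crm(row: Dict[str, Any]) -> Optional[Document]:
    # Assumed CRM shape: {"id", "notes", "modified"} with an ISO 8601 timestamp.
    text = (row.get("notes") or "").strip()
    if not text:
        return None  # drop empty records instead of passing them downstream
    return Document(
        doc_id=f"crm-{row['id']}",
        text=text,
        updated_at=datetime.fromisoformat(row["modified"]).astimezone(timezone.utc),
        source="crm",
    )


def from_wiki(row: Dict[str, Any]) -> Optional[Document]:
    # Assumed wiki shape: {"page_id", "body", "last_edit_epoch"} in epoch seconds.
    text = (row.get("body") or "").strip()
    if not text:
        return None
    return Document(
        doc_id=f"wiki-{row['page_id']}",
        text=text,
        updated_at=datetime.fromtimestamp(row["last_edit_epoch"], tz=timezone.utc),
        source="wiki",
    )


def ingest(
    crm_rows: Iterable[Dict[str, Any]],
    wiki_rows: Iterable[Dict[str, Any]],
) -> Dict[str, Document]:
    """Normalize both feeds and keep the most recent version of each document."""
    latest: Dict[str, Document] = {}
    candidates = [from_crm(r) for r in crm_rows] + [from_wiki(r) for r in wiki_rows]
    for doc in candidates:
        if doc is None:
            continue
        current = latest.get(doc.doc_id)
        if current is None or doc.updated_at > current.updated_at:
            latest[doc.doc_id] = doc
    return latest
```

Keeping one small adapter per source means a new feed only needs its own mapping function, while cleaning and deduplication rules stay in one place.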

Deploying an enterprise AI solution raises infrastructure complexity to a new level: the platform must deliver high availability, reliability, and consistent performance. While a PoC may operate in a controlled, sandboxed environment, production deployment demands a scalable approach built on containerization, orchestration, CI/CD (Continuous Integration/Continuous Deployment), cloud scalability, and seamless integration with existing enterprise systems.

Moving beyond foundational infrastructure, agentic solutions introduce additional operational challenges. For example, when deploying agents that execute long-running tasks and stream progress, standard deployment strategies can quickly become inadequate. Rolling out a new version while several agents are actively processing risks disrupting ongoing operations. Addressing these scenarios requires infrastructure and operational practices specifically designed to minimize disruption and maintain service continuity for complex, long-lived workloads. One example is the rainbow deployment strategy that Anthropic describes for its multi-agent research system, in which old and new versions run side by side and traffic shifts gradually so that running agents are not interrupted.
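To make the idea of a version-aware rollout more concrete, here is a minimal sketch in which new tasks are routed to the newest version while in-flight tasks stay pinned to the version that started them, and an old version is retired only once it has drained. The `VersionRouter` class and its methods are hypothetical and in-memory only; this illustrates the general pattern and is not a description of any vendor's actual implementation.

```python
from typing import Dict, List, Set


class VersionRouter:
    """Tracks which deployed version owns each running task (illustrative only)."""

    def __init__(self, initial_version: str) -> None:
        self.current = initial_version
        self._active: Dict[str, Set[str]] = {initial_version: set()}

    def deploy(self, version: str) -> None:
        # The new version starts receiving new tasks; older ones keep running.
        self._active.setdefault(version, set())
        self.current = version

    def start_task(self, task_id: str) -> str:
        # New work always lands on the current version and stays pinned to it.
        self._active[self.current].add(task_id)
        return self.current

    def finish_task(self, task_id: str) -> None:
        for tasks in self._active.values():
            tasks.discard(task_id)

    def retire_drained(self) -> List[str]:
        # Only versions with no in-flight tasks are safe to shut down.
        drained = [
            version
            for version, tasks in self._active.items()
            if version != self.current and not tasks
        ]
        for version in drained:
            del self._active[version]
        return drained

    def live_versions(self) -> List[str]:
        return list(self._active)
```

In this sketch, calling `retire_drained()` periodically replaces the hard cutover of a standard rollout: a version disappears only after its last long-running agent has finished.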

Operational excellence in enterprise AI also depends on adopting DevOps, MLOps, and LLMOps (Large Language Model Operations) practices. Organizations must establish CI/CD pipelines to automate testing and model updates, ensuring seamless transitions across development, staging, and production environments.

Scalability is non-negotiable. Early-stage products may handle limited traffic, but enterprise AI solutions must support thousands of concurrent requests with minimal latency. This requires infrastructure designed for auto-scaling, load balancing, and fault tolerance. Leveraging managed AI services or cloud-native infrastructure enables on-demand scalability and high availability, allowing engineering teams to focus on delivering business value rather than managing infrastructure overhead.

Testing Generative AI solutions requires a fundamentally different approach than traditional software testing. Instead of verifying that outputs match expected values exactly, evaluation must account for nuance, variability, and probabilistic outcomes. The goal is not binary correctness, but rather to determine whether outputs are “good enough” according to well-defined, business-relevant criteria.

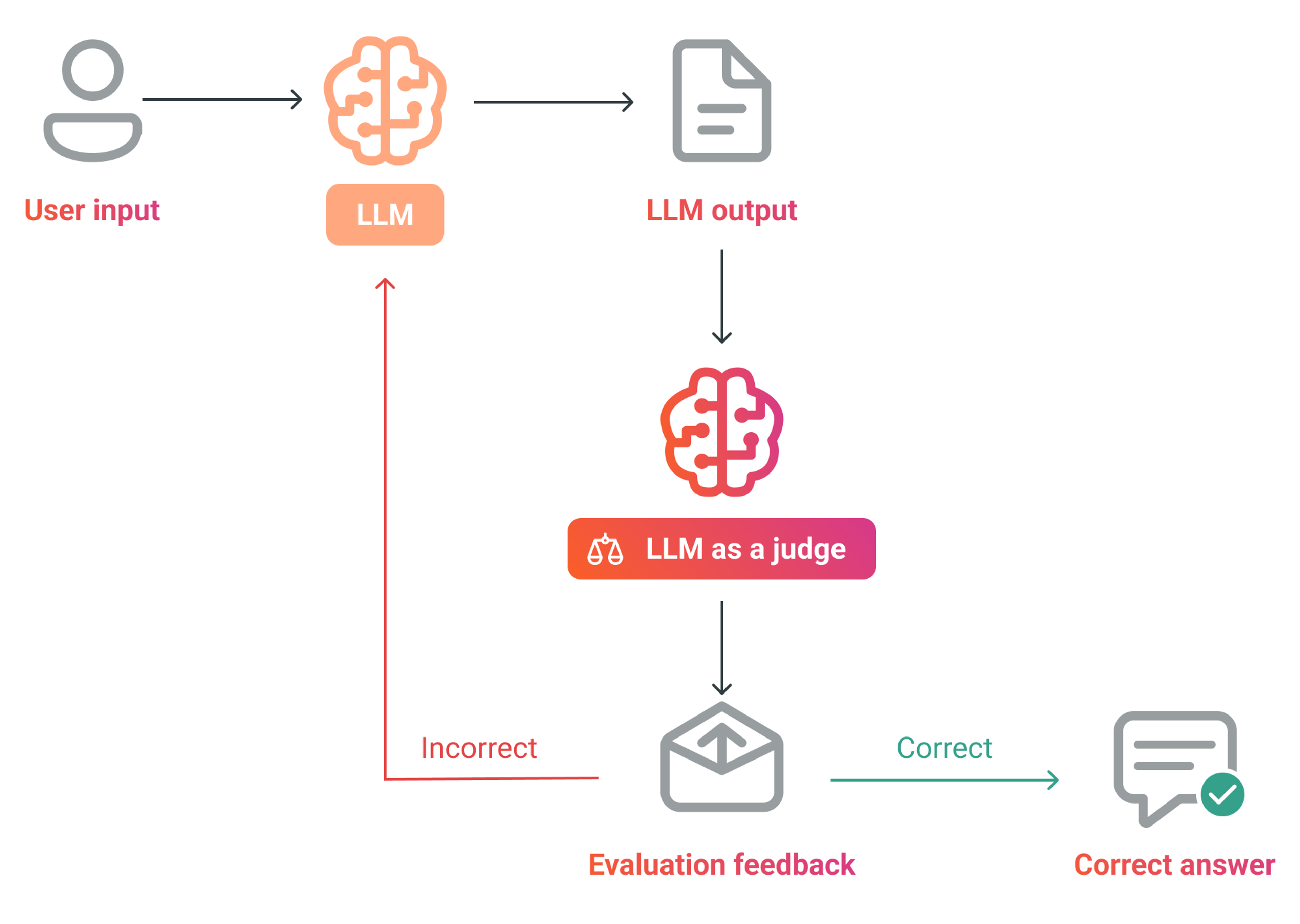

Traditional testing methods are supplemented by AI-specific evaluations, commonly referred to as evals. Evals are structured frameworks designed to benchmark model performance across dimensions such as output correctness, relevance, coherence, bias, factual accuracy, and overall quality. These evaluations often leverage curated datasets of expected inputs and outputs, and may even use Generative AI to validate results at scale.

A notable practice in this space is the use of LLM as a judge. This technique employs an LLM to automatically assess the output of another LLM or agentic solution, delivering subjective but scalable judgments on performance. LLMs as judges are particularly effective for use cases such as verifying context utilization in RAG systems, conducting comparative evaluations between different models, and screening for toxicity, bias, or factual inaccuracies. While this approach accelerates and scales the evaluation process, human oversight remains essential. Continuous human-in-the-loop review is required to validate LLM-based assessments and refine evaluation prompts, ensuring the integrity of the testing process.
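A minimal sketch of the LLM-as-a-judge pattern for checking context utilization in a RAG answer might look like the following. The prompt wording, the 1 to 5 scale, and the `call_llm` parameter are assumptions made for illustration: `call_llm` stands in for whatever client function sends a prompt to the judging model and returns its text reply.

```python
import re
from typing import Callable, Optional

# Hypothetical grading prompt; real prompts are refined through human review.
JUDGE_PROMPT = """You are grading an answer produced by another system.
Question: {question}
Retrieved context: {context}
Answer: {answer}
Rate the answer from 1 (ignores or contradicts the context) to 5 (fully grounded in it).
Reply with the line 'SCORE: <n>' followed by a one-sentence reason."""


def judge_answer(
    question: str,
    context: str,
    answer: str,
    call_llm: Callable[[str], str],
) -> Optional[int]:
    """Ask the judging model for a score; return None if the reply is unusable."""
    prompt = JUDGE_PROMPT.format(question=question, context=context, answer=answer)
    reply = call_llm(prompt)
    match = re.search(r"SCORE:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None


def fake_judge(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs without network access.
    return "SCORE: 4\nThe answer is mostly supported by the context."


score = judge_answer(
    question="What is the refund window?",
    context="Refunds are accepted within 30 days of purchase.",
    answer="You can request a refund within 30 days.",
    call_llm=fake_judge,
)
```

Returning `None` for malformed replies, rather than guessing a score, gives the human-in-the-loop review a clear queue of cases where the judge itself needs attention.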

Regression testing for enterprise AI products presents unique challenges due to the non-deterministic nature of generative models, as identical inputs can yield different outputs across runs. To mitigate this, it’s critical to establish a robust baseline: a dataset of representative questions and high-quality expected answers, or an automated eval pipeline that flags drops in accuracy or quality with each new release. This approach helps maintain consistency and reliability as the solution evolves.
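One simple way to flag quality drops between releases is sketched below. It assumes each test case already has a baseline score and that the candidate release was scored several times per case, so that run-to-run variation is averaged out. The case names, the 0 to 1 scoring scale, and the 0.05 tolerance are illustrative assumptions.

```python
from statistics import mean
from typing import Dict, List


def find_regressions(
    baseline: Dict[str, float],
    candidate_runs: Dict[str, List[float]],
    tolerance: float = 0.05,
) -> Dict[str, float]:
    """Return the cases whose average score dropped by more than `tolerance`."""
    regressions: Dict[str, float] = {}
    for case_id, baseline_score in baseline.items():
        runs = candidate_runs.get(case_id) or []
        # A case with no candidate runs is treated as a full regression.
        candidate_score = mean(runs) if runs else 0.0
        drop = baseline_score - candidate_score
        if drop > tolerance:
            regressions[case_id] = round(drop, 3)
    return regressions


# Hypothetical scores on a 0-1 scale, three runs per case for the new release.
baseline_scores = {"refund-policy": 0.9, "sla-terms": 0.8}
new_release_runs = {
    "refund-policy": [0.9, 0.85, 0.95],
    "sla-terms": [0.6, 0.7, 0.65],
}
flagged = find_regressions(baseline_scores, new_release_runs)
```

A CI job could fail the release whenever `flagged` is non-empty, which turns the baseline dataset into an automated gate rather than a manual checklist.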

Enterprise AI solutions must be engineered for safety, security, and compliance from the outset. While early-stage products may prioritize speed and functionality (often using mock data and minimal controls), enterprise AI deployments demand rigorous adherence to legal, regulatory, and ethical standards. As these systems frequently process sensitive user data or proprietary business information, strict compliance with data privacy laws such as GDPR or HIPAA is non-negotiable.

LLMs present certain risks, such as the potential for memorizing and inadvertently exposing personally identifiable information (PII) or confidential company data. To mitigate these risks, AI development teams must audit training data for PII, implement robust safeguards to prevent data leakage, and ensure that any customer data processed in production is protected or anonymized. It’s essential to implement secure data pipelines where PII is obfuscated prior to model ingestion and deobfuscated only when necessary. In some cases, regulatory or security requirements may necessitate deploying open-source models on-premises, rather than leveraging third-party LLMs.
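The obfuscate-then-restore flow can be sketched as follows. This example handles only email addresses with a simple regular expression, which is an assumption for brevity: production systems typically rely on dedicated PII detection covering names, phone numbers, identifiers, and more. The placeholder format is also hypothetical.

```python
import re
from typing import Dict, Tuple

# Deliberately simple pattern for illustration; not a complete email matcher.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def obfuscate(text: str) -> Tuple[str, Dict[str, str]]:
    """Replace each distinct email with a stable placeholder before model ingestion."""
    placeholder_by_value: Dict[str, str] = {}
    value_by_placeholder: Dict[str, str] = {}

    def replace(match: "re.Match[str]") -> str:
        value = match.group(0)
        if value not in placeholder_by_value:
            placeholder = f"<EMAIL_{len(placeholder_by_value) + 1}>"
            placeholder_by_value[value] = placeholder
            value_by_placeholder[placeholder] = value
        return placeholder_by_value[value]

    return EMAIL_RE.sub(replace, text), value_by_placeholder


def deobfuscate(text: str, mapping: Dict[str, str]) -> str:
    """Restore original values in model output, only where that is necessary."""
    for placeholder, value in mapping.items():
        text = text.replace(placeholder, value)
    return text


original = "Contact ana@example.com or bob@example.org; ana@example.com is preferred."
masked, mapping = obfuscate(original)
restored = deobfuscate(masked, mapping)
```

The mapping never leaves the trusted boundary: only `masked` is sent to the model, and the same address always maps to the same placeholder so the model can still reason about who is who.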

System logs also require careful management. Effective monitoring and observability are critical for operational excellence, but must never compromise data privacy or security. Sensitive information should be excluded or anonymized in logs to prevent accidental exposure. We’ll delve into monitoring and observability in the next article of this series.
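For log hygiene specifically, one option is to redact sensitive values at the logging layer so individual call sites cannot leak them by accident. The sketch below uses a filter from Python's standard `logging` module; the email-only pattern, the `RedactEmails` class name, and the `genai-service` logger name are illustrative assumptions.

```python
import logging
import re

# Deliberately simple pattern for illustration; extend it for other PII types.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class RedactEmails(logging.Filter):
    """Masks email addresses in the fully formatted log message."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Format first so values passed as %-style arguments are redacted too.
        record.msg = EMAIL_RE.sub("[REDACTED_EMAIL]", record.getMessage())
        record.args = None
        return True  # keep the record, now without the sensitive value


handler = logging.StreamHandler()
handler.addFilter(RedactEmails())

logger = logging.getLogger("genai-service")  # hypothetical service name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.info("Password reset requested by %s", "ana@example.com")
```

Attaching the filter to the handler, rather than to a single logger, applies the redaction to every record that handler emits.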

Beyond privacy and security, organizations must navigate a complex and evolving regulatory landscape. New frameworks such as the EU AI Act and sector-specific guidelines require that AI systems do not generate illegal, discriminatory, or otherwise harmful content. Implementing Responsible AI frameworks is essential. This includes systematic bias testing, building explainability into model outputs, and ongoing monitoring for unfair or unintended outcomes. Transparency is increasingly important: some regulations and customer expectations call for clear disclosure when content is AI-generated and for decision processes that can be explained.

To meet these requirements, organizations should establish robust governance structures, such as AI ethics committees and compliance checklists, to review models both before and after deployment. These measures help ensure that enterprise AI solutions not only deliver business value, but also uphold high standards of trust, accountability, and regulatory compliance.

Establishing a scalable, resilient infrastructure is foundational to the success of enterprise AI initiatives. By prioritizing robust data management, comprehensive testing, and strict compliance, organizations can deploy AI solutions that are both secure and reliable, delivering measurable business value.

In the final article of this series, we’ll examine strategies for optimizing performance and managing costs, equipping you with practical insights to maximize the return on your AI investments.
