Pablo Romeo

Co-Founder & CTO

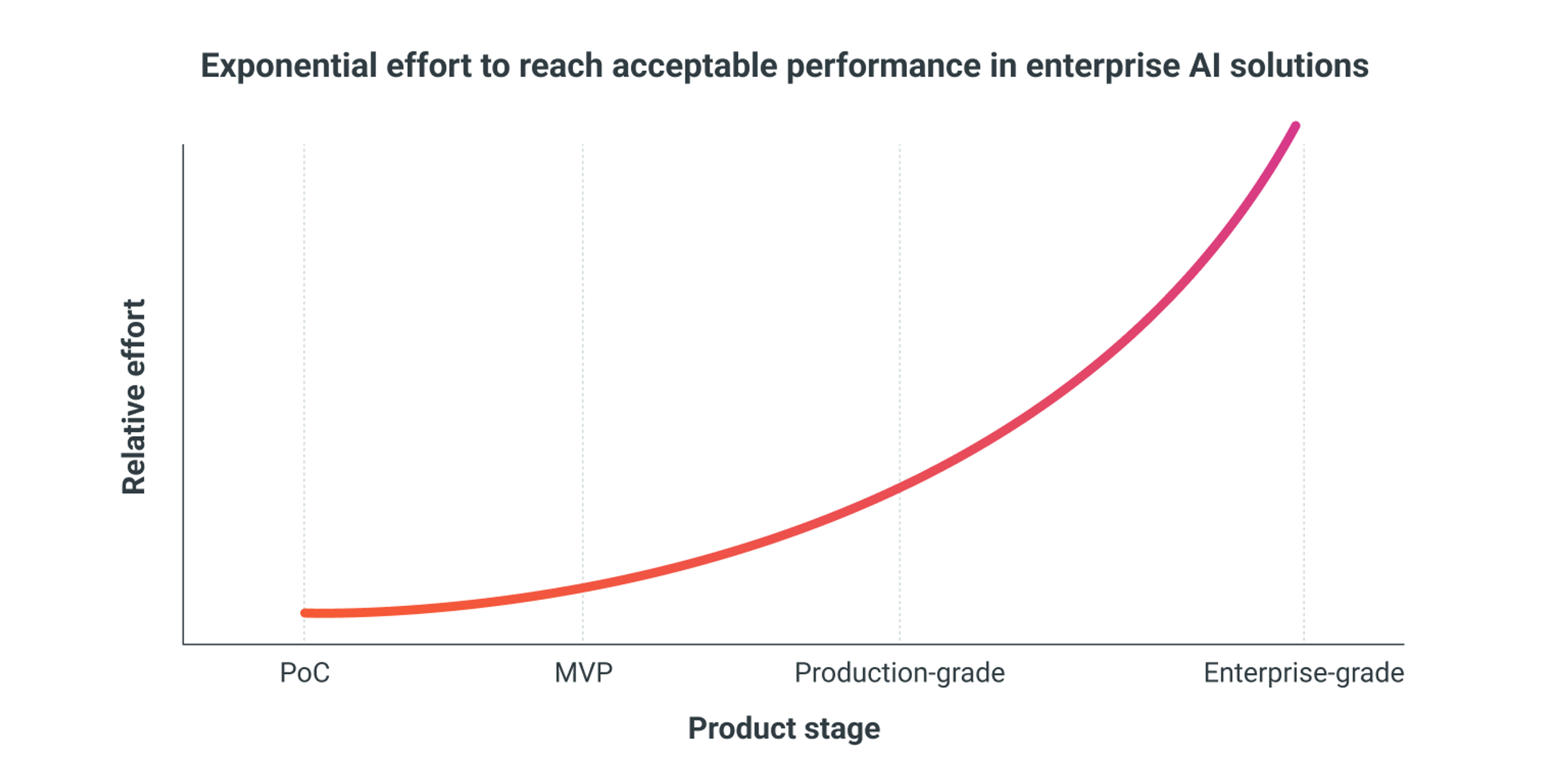

Bridging the gap between an early-stage generative product and a robust enterprise AI solution is far more challenging than most people anticipate. It’s a common misconception that a working prototype means you’re halfway to the finish line. In reality, the leap to enterprise AI demands a fundamentally different approach, one that exposes hidden complexities and requires rigorous engineering at every step. Why is this transition so demanding, and what does it truly take to deliver reliable, scalable AI at enterprise scale? Let’s examine the realities behind the exponential effort curve that separates promising demos from production-grade solutions.

Whether you’re working with a Proof of Concept (PoC), a Minimum Viable Product (MVP), or a more advanced solution that hasn’t yet reached enterprise maturity, the path to enterprise scale introduces a new set of challenges. Early-stage products are designed to prove feasibility with minimal scope and limited requirements. By contrast, enterprise-grade AI systems must deliver consistent reliability, integrate seamlessly with existing business processes, and meet rigorous operational standards. Even if your initial implementation works well enough, the climb to true enterprise readiness is steep.

Welcome to the first installment of our series on evolving Generative AI from early-stage prototypes to robust enterprise solutions. In this article, we’ll examine what it takes to move beyond basic functionality, focusing on fine-tuning, grounding, and the implementation of guardrails. In the coming articles, we’ll explore additional pillars of enterprise AI, so I encourage you to stay tuned.

Building a Generative AI demo or PoC is relatively straightforward, but this simplicity can be deceptive. Each subsequent development phase introduces increasing complexity. For example, while Retrieval Augmented Generation (RAG) PoCs can be quickly assembled using online tutorials, moving from a passable RAG result to accuracy that is genuinely acceptable for production demands far more effort.

If you’re unfamiliar with RAG, it’s a technique for enriching model responses with relevant information from a curated set of external sources, such as documents, presentations, or spreadsheets. For a deeper dive into RAG and other foundational terms, see our previous article on key concepts of Generative AI.

Regardless of whether your solution uses RAG, the previous example highlights the complexities involved in building reliable and predictable AI software. In enterprise environments, consistency, reliability, predictability, and the absence of hallucinations (plausible-sounding but incorrect answers) are non-negotiable. Entry-level implementations rarely meet these standards.

Large Language Models (LLMs) can be misleading to those new to the field. Out of the box, they may appear to “think,” but they’re prone to hallucinations and unpredictable failures. In many cases, a pre-trained model might be insufficient for specialized tasks, requiring the development of a custom model. Developing generative products is an iterative process, more so than traditional development. We aim for an initial implementation that works well enough, then evolve it to enhance consistency and predictability. It’s a delicate balance, as even minor feature additions can disrupt previously stable functions, making the process anything but straightforward. Experimentation is essential, as optimal prompts, required integrations, and even final solution costs cannot always be predicted beforehand. The maturity curve for enterprise AI is steeper and more demanding than for conventional applications.

Given these realities, it’s critical to select a technology partner with proven expertise in delivering enterprise-grade Generative AI solutions. The right partner ensures your investment translates into measurable business value and long-term ROI.

To achieve this, an enterprise AI solution must address:

It’s a substantial undertaking. In this article, we’ll focus on the first two pillars: model fine-tuning and grounding, and implementing effective guardrails. Let’s examine each in detail.

In the PoC phase, teams typically rely on pre-trained generative models (such as GPT variants) paired with basic prompt engineering to validate feasibility. While this approach can demonstrate initial potential, it falls short of the rigorous standards required for enterprise AI deployment. At the enterprise level, the model must be purpose-built for specific business tasks and consistently deliver high accuracy and quality.

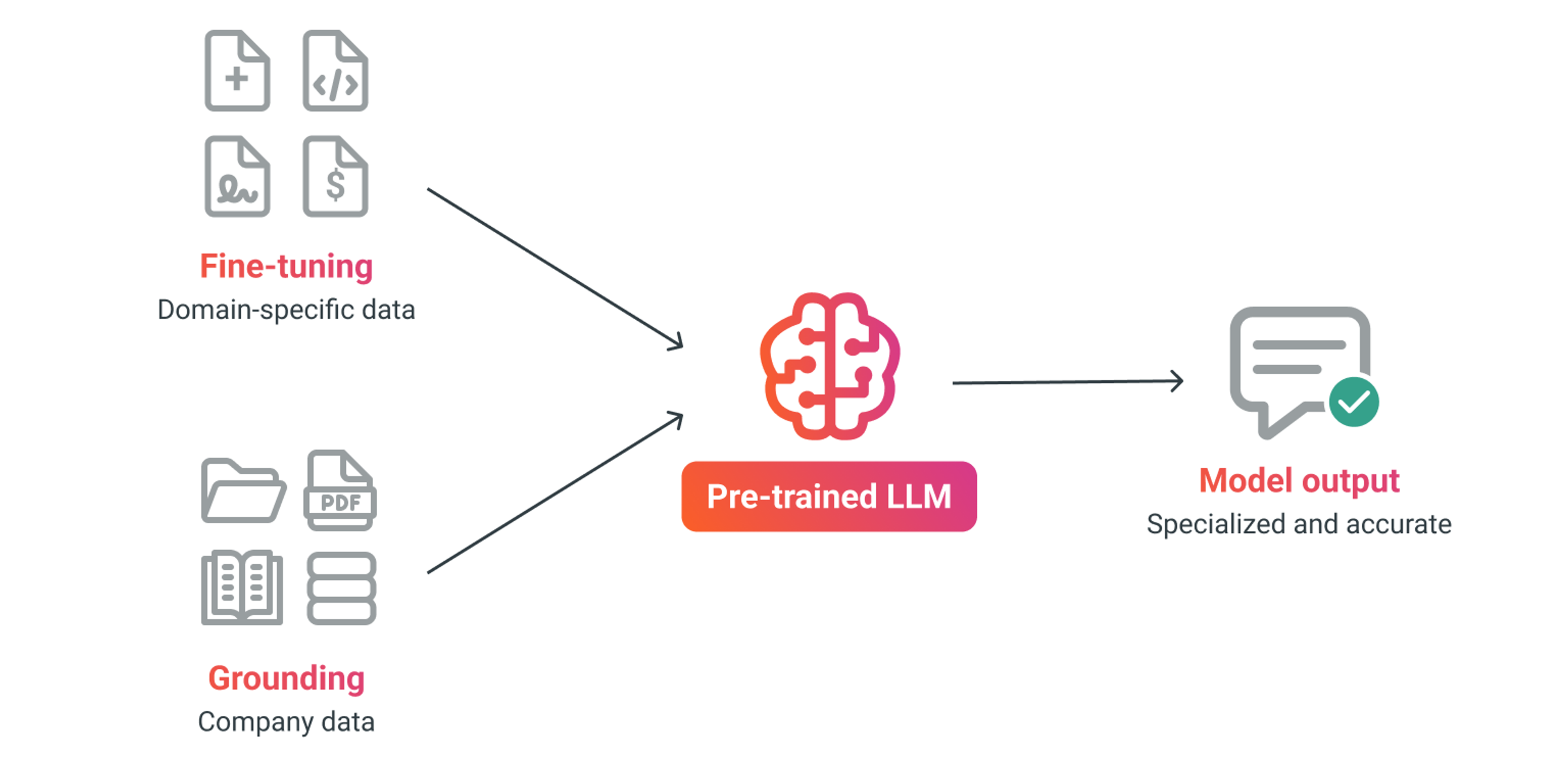

To achieve this, Generative AI teams employ two core techniques: fine-tuning and grounding.

Fine-tuning customizes the model using domain-specific data, transforming a general-purpose LLM into a specialized tool for targeted business scenarios. This approach boosts both relevance and accuracy. For example, in healthcare, fine-tuning on clinical notes supports more precise medical summaries, while in finance, training on transaction records helps keep outputs aligned with compliance standards. If your use case requires structured JSON, legal drafting, or code in a defined style, fine-tuning with real examples helps the model deliver the right format consistently, reducing errors and post-processing.
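To make the structured JSON case concrete, here is a minimal sketch of preparing fine-tuning examples. It assumes a chat-style JSONL layout (a `messages` list with `role` and `content`), which several fine-tuning services accept, so check your provider's exact format. The invoice fields, the `SYSTEM` prompt, and the helper names are hypothetical and serve only as illustration.

```python
import json

def build_example(system: str, user: str, assistant_obj: dict) -> dict:
    """Return one chat-style training example whose target is a JSON string."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
            {"role": "assistant", "content": json.dumps(assistant_obj, sort_keys=True)},
        ]
    }

def is_valid_example(example: dict, required_keys: set) -> bool:
    """Check that the assistant target parses as a JSON object with the required keys."""
    target = example["messages"][-1]["content"]
    try:
        parsed = json.loads(target)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and required_keys.issubset(parsed)

def to_jsonl(examples: list) -> str:
    """Serialize examples as one JSON document per line."""
    return "\n".join(json.dumps(e) for e in examples)

# Hypothetical task: extract invoice fields in a fixed JSON shape.
SYSTEM = "Extract the invoice fields as JSON."
REQUIRED = {"invoice_id", "vendor", "total"}

examples = [
    build_example(SYSTEM, "Invoice 1042 from Acme, total 310.50 USD",
                  {"invoice_id": "1042", "vendor": "Acme", "total": 310.5}),
    build_example(SYSTEM, "Invoice 2210 from Globex, total 99 USD",
                  {"invoice_id": "2210", "vendor": "Globex", "total": 99.0}),
    # A malformed, hand-labeled example that should be filtered out.
    {"messages": [
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": "Invoice 3001 from Initech"},
        {"role": "assistant", "content": "vendor is Initech"},
    ]},
]

clean = [e for e in examples if is_valid_example(e, REQUIRED)]
jsonl = to_jsonl(clean)
```

Validating targets before training matters because the model learns whatever format the examples show, including their mistakes.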

Grounding further enhances accuracy by anchoring the model’s responses in use-case-specific information. Techniques such as RAG are widely adopted in enterprise AI for this purpose. Rather than retraining the model, RAG retrieves relevant company data from documents, databases, or other repositories at query time and injects it into the prompt, so that outputs are contextually accurate and aligned with organizational knowledge.
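The retrieve-then-inject flow can be sketched in a few lines. This is a simplified illustration, not a production design: it ranks documents by plain word overlap where a real system would typically use embeddings and a vector index, and the `DOCUMENTS` contents, IDs, and function names are made up for the example.

```python
import re

# Hypothetical knowledge base: document ID -> text.
DOCUMENTS = {
    "hr-001": "Employees accrue 20 vacation days per year.",
    "it-007": "Password resets are handled through the IT self-service portal.",
    "fin-012": "Expense reports must be submitted within 30 days of purchase.",
}

def tokenize(text: str) -> set:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: dict, top_k: int = 2) -> list:
    """Rank documents by word overlap with the query (a stand-in for vector search)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(text)), doc_id)
              for doc_id, text in documents.items()]
    scored = [(score, doc_id) for score, doc_id in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:top_k]]

def build_prompt(query: str, documents: dict, top_k: int = 2) -> str:
    """Inject the retrieved passages, tagged with their IDs, into the prompt."""
    doc_ids = retrieve(query, documents, top_k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say you do not know.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

prompt = build_prompt("How many vacation days do employees get?", DOCUMENTS)
```

The resulting `prompt` would then be sent to whichever model you use. Tagging each passage with its ID is a deliberate choice, since it lets the model cite where an answer came from.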

Achieving production-grade accuracy is an iterative process. It requires ongoing adjustments to training data, prompt refinement, and experimentation with different model variants until performance metrics meet enterprise thresholds. Addressing LLM-specific challenges such as hallucinations or inconsistent outputs is essential. These issues, often tolerated in early-stage products, must be systematically minimized to ensure reliability and trust in enterprise AI applications.
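One way to make this iteration measurable is a small evaluation harness that scores each model or prompt variant against a fixed test set and compares the result to a target. The sketch below is illustrative only: the questions, the two stand-in variants, the substring-match scoring, and the 0.95 threshold are all assumptions, and real evaluations usually need richer metrics.

```python
from typing import Callable

# Hypothetical labeled evaluation set.
EVAL_SET = [
    {"question": "What is the refund window?", "expected": "30 days"},
    {"question": "Who approves travel?", "expected": "line manager"},
    {"question": "What is the minimum password length?", "expected": "12 characters"},
]

def accuracy(generate: Callable[[str], str], eval_set: list) -> float:
    """Fraction of cases whose answer contains the expected phrase."""
    hits = sum(
        1 for case in eval_set
        if case["expected"].lower() in generate(case["question"]).lower()
    )
    return hits / len(eval_set)

def meets_threshold(generate: Callable[[str], str], eval_set: list,
                    threshold: float = 0.95) -> bool:
    """Return True when the variant reaches the (assumed) accuracy target."""
    return accuracy(generate, eval_set) >= threshold

# Two stand-ins for real model calls, e.g. different prompts or model versions.
def variant_a(question: str) -> str:
    answers = {
        "What is the refund window?": "Refunds are accepted within 30 days.",
        "Who approves travel?": "Your line manager approves travel.",
    }
    return answers.get(question, "I am not sure.")

def variant_b(question: str) -> str:
    if "password" in question:
        return "Passwords need at least 12 characters."
    return variant_a(question)

results = {
    "variant_a": accuracy(variant_a, EVAL_SET),
    "variant_b": accuracy(variant_b, EVAL_SET),
}
```

Keeping the evaluation set fixed between iterations also helps catch regressions when a new feature or prompt change breaks behavior that was previously stable.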

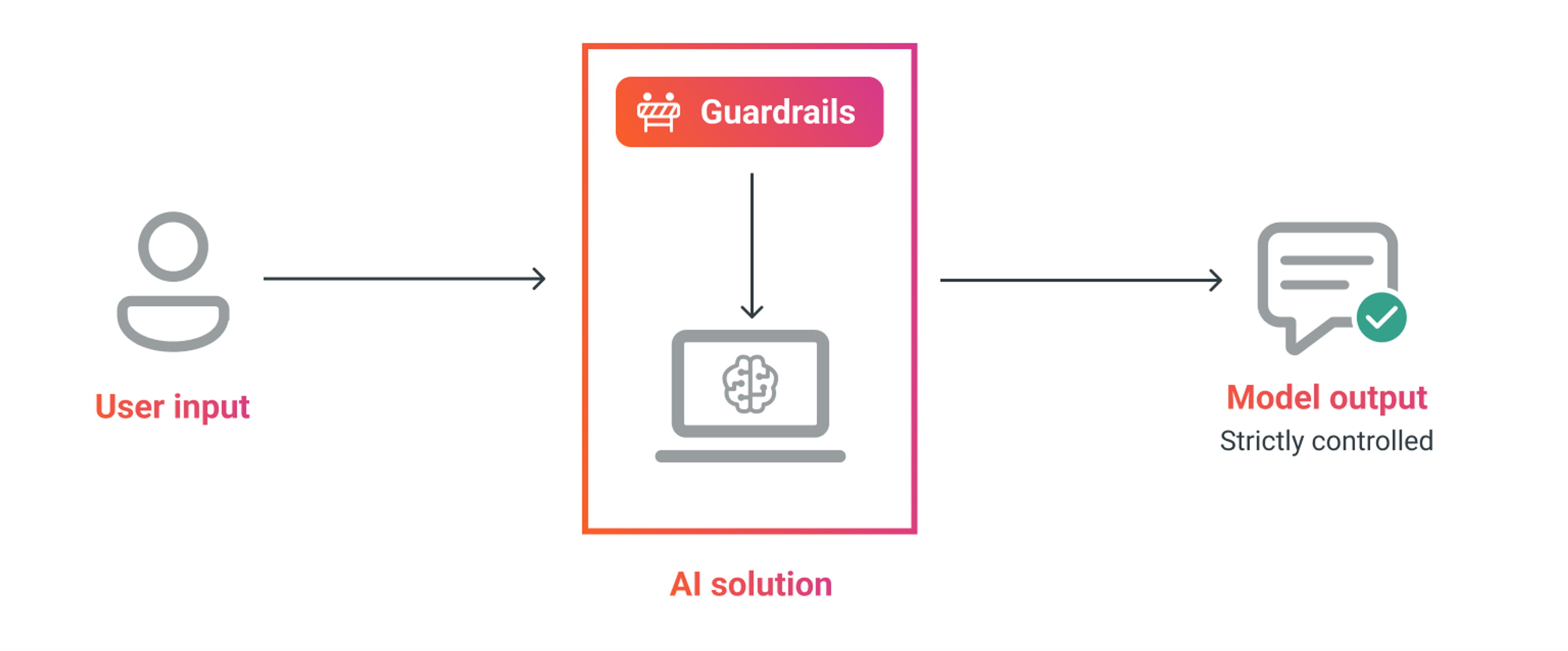

In enterprise environments, establishing robust guardrails is essential for managing AI-human interactions and safeguarding organizational integrity. Guardrails ensure the AI operates strictly within its defined scope, preventing it from engaging in topics or actions outside its intended function. For example, if a user queries a corporate knowledge base for React code, the system should redirect the request to a more suitable platform, such as ChatGPT, rather than attempting to generate code in an unsupported context. Equally important, the model must avoid discussing sensitive topics or inadvertently disclosing information embedded in system prompts.

Effective guardrails can take several forms: enforcing strict answer validation rules, limiting response length, or integrating fallback logic when the model’s confidence is low. These measures help maintain control over the AI’s outputs and reduce the risk of unintended consequences.
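These three forms can be combined in a single post-processing step. The following is a minimal sketch under stated assumptions: the `confidence` score is supplied by the caller (for example, from a retrieval score or a separate verifier, since LLMs do not report a reliable confidence by themselves), and the thresholds, blocked terms, and fallback message are placeholder values.

```python
from dataclasses import dataclass

# Placeholder policy values; tune these for your own use case.
FALLBACK = "I can't answer that reliably. Please contact support."
MAX_CHARS = 600
MIN_CONFIDENCE = 0.7
BLOCKED_TERMS = ("system prompt", "internal use only")

@dataclass
class ModelOutput:
    text: str
    confidence: float  # assumed to come from the caller, not from the LLM itself

def apply_guardrails(output: ModelOutput) -> str:
    """Return the text that is safe to show the user, or a fallback message."""
    # Fallback logic: refuse when confidence is below the threshold.
    if output.confidence < MIN_CONFIDENCE:
        return FALLBACK

    text = output.text.strip()

    # Validation rules: reject empty answers and answers with blocked terms.
    if not text or any(term in text.lower() for term in BLOCKED_TERMS):
        return FALLBACK

    # Length limit: truncate at the last word boundary within the limit.
    if len(text) > MAX_CHARS:
        text = text[:MAX_CHARS].rsplit(" ", 1)[0] + "..."

    return text
```

For example, `apply_guardrails(ModelOutput("Our refund window is 30 days.", 0.92))` returns the answer unchanged, while the same text with a confidence of `0.4` returns the fallback message.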

Controlling the agent’s actions and decisions is particularly critical when it comes to preventing hallucinations. The Air Canada case, decided in 2024, in which the airline was held liable after its chatbot gave a customer misleading information, underscores the real-world risks of inadequate output control. To maintain accuracy and reliability, enterprise AI solutions must ensure traceability from source data to final response. Source attribution compels the model to ground its answers in verifiable information, providing explicit justifications for its recommendations. Additionally, chain-of-thought prompting guides the model through logical reasoning steps, enabling systematic verification and transparent decision-making.
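A basic traceability check can be sketched as follows. It assumes the model has been instructed to cite sources as bracketed IDs such as `[fin-012]`, which is a convention invented for this example, and it only verifies that every cited ID was among the retrieved documents. It does not confirm that the cited passage actually supports the claim, which requires a separate verification step.

```python
import re

# Assumed citation convention: bracketed document IDs such as [fin-012].
CITATION = re.compile(r"\[([A-Za-z0-9_-]+)\]")

def cited_sources(answer: str) -> list:
    """Return every document ID cited in the answer, in order of appearance."""
    return CITATION.findall(answer)

def check_attribution(answer: str, retrieved_ids: set) -> dict:
    """Report which citations trace back to documents that were retrieved."""
    cited = cited_sources(answer)
    unknown = sorted(set(cited) - retrieved_ids)
    return {
        "cited": cited,
        "unknown": unknown,
        # Traceable only if there is at least one citation and none is unknown.
        "traceable": bool(cited) and not unknown,
    }

retrieved = {"fin-012", "hr-001"}
grounded = check_attribution("Expense reports are due within 30 days [fin-012].", retrieved)
ungrounded = check_attribution("Refunds are issued instantly [blog-99].", retrieved)
uncited = check_attribution("Refunds are issued instantly.", retrieved)
```

An answer that fails this check can be routed to fallback logic instead of being shown to the user.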

While advanced reasoning models (such as DeepSeek R1 or OpenAI’s o3) offer enhanced logical capabilities, they often come with higher costs and slower response times. For many enterprise AI applications, optimizing traditional LLMs through expert development can achieve comparable results with greater efficiency. Carefully evaluating the cost-benefit tradeoff is essential to ensure the chosen approach aligns with business objectives and operational constraints.

Transitioning from an early-stage generative product to an enterprise AI solution is a complex undertaking that demands rigorous attention to detail. By addressing foundational elements such as fine-tuning, grounding, and robust output control, organizations can lay the groundwork for reliable, scalable AI deployments that deliver measurable business value.

In the next installment, we’ll examine the critical challenges of scaling, infrastructure, testing, and compliance, providing insights into building resilient, enterprise-ready AI systems.
