Pablo Romeo

Co-Founder & CTO

When was the first time you heard about Artificial Intelligence?

The 2020s came with new advancements in this field, led by what we call Generative AI. But I am pretty sure that was not the first time you heard about AI—it was probably many years ago. We have been talking about Artificial Intelligence for at least half a century. So, what is the hype? How is the new AI different from traditional AI?

In many conversations I have had with clients over the past months, I noticed that this topic often leads to confusion. In this article I will clarify the differences between Generative AI and traditional AI. You will also learn about several tools that coexist inside the AI ecosystem, such as Supervised Learning, Unsupervised Learning, Reinforcement Learning, and of course Generative AI. These techniques complement each other: each offers a different approach to AI and suits different applications.

Andrew Ng explained in a talk for Stanford University that AI is not just one tool, but a collection of tools. He added that, just like electricity, AI is a general-purpose technology because it has so many applications.

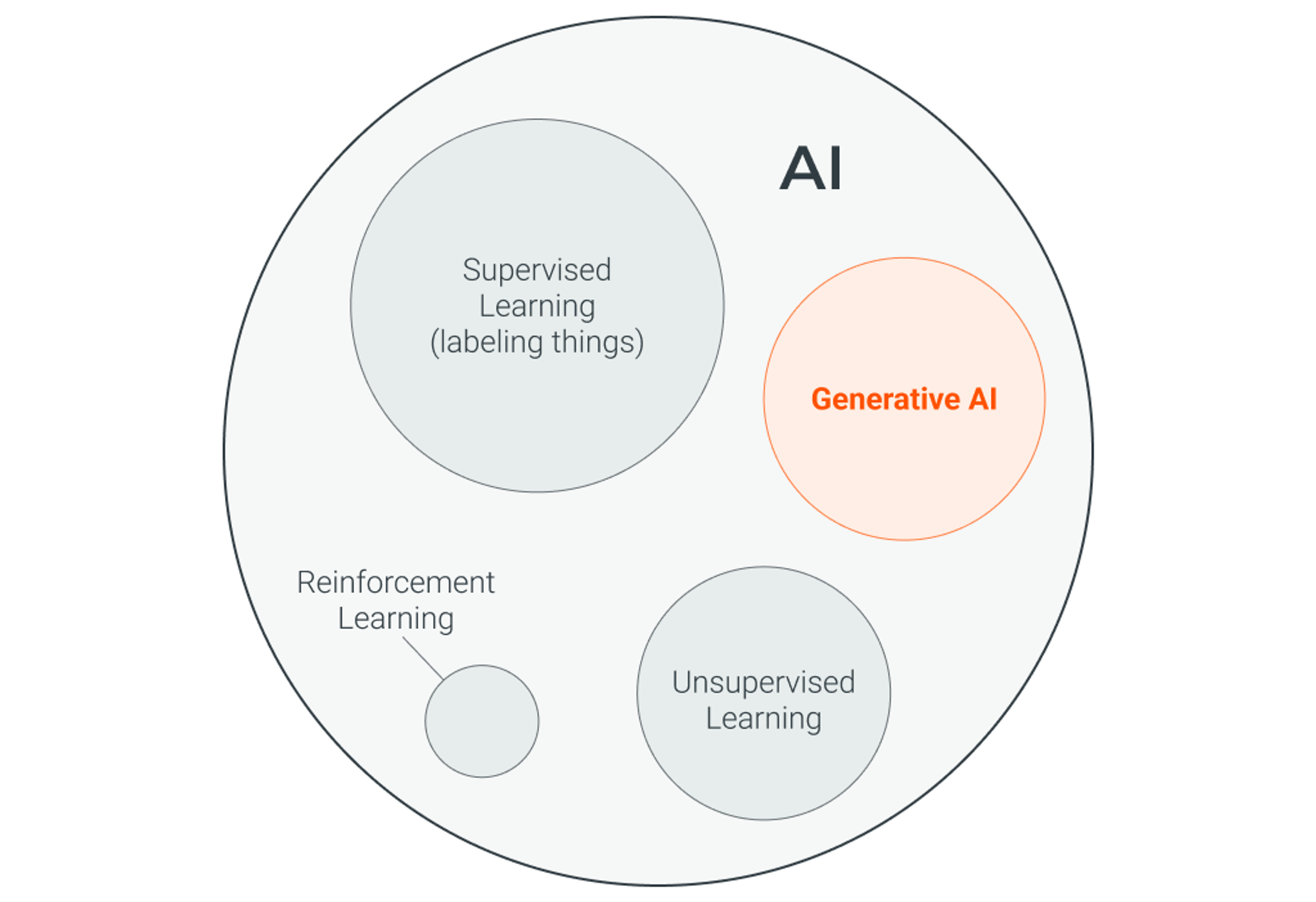

With the rise of new AI tools like Generative AI, humanity has unlocked the ability to solve problems that could not be solved with other AI approaches. We have been talking endlessly about Generative AI for a couple of years now, but Generative AI is only one part of the large ecosystem that is Artificial Intelligence.

In the graphic above you can see that Generative AI is only one portion of AI, and not all AI models are generative. AI has gone through many decades of development before we started talking about Generative AI.

We call “traditional AI” the portion of the AI ecosystem that is not Generative AI. Traditional AI performs specific tasks based on pre-programmed algorithms that follow explicit, predefined rules. As a result, traditional AI models are rigid: they struggle to adapt to new, unforeseen situations and need human intervention to update their rules when scenarios change. This makes them less agile and adaptable.

Traditional AI models mimic logical reasoning, but they do not imitate creativity. For this reason, traditional AI models are excellent at solving well-defined problems and performing repetitive tasks, such as identifying patterns in their input data.

Artificial Intelligence is a broad field, but let’s focus for a moment on the part of AI that is most relevant to this article: Machine Learning (ML). Machine Learning takes AI one step further and enables a model to learn and improve autonomously, without being explicitly programmed. ML models recognize patterns in data and extract knowledge from it, and they can produce insights and predictions based on their training data.

Next we will discuss three approaches to Machine Learning, which differ in the learning paradigm they are based on. Each one has unique characteristics and is suitable for solving different problems.

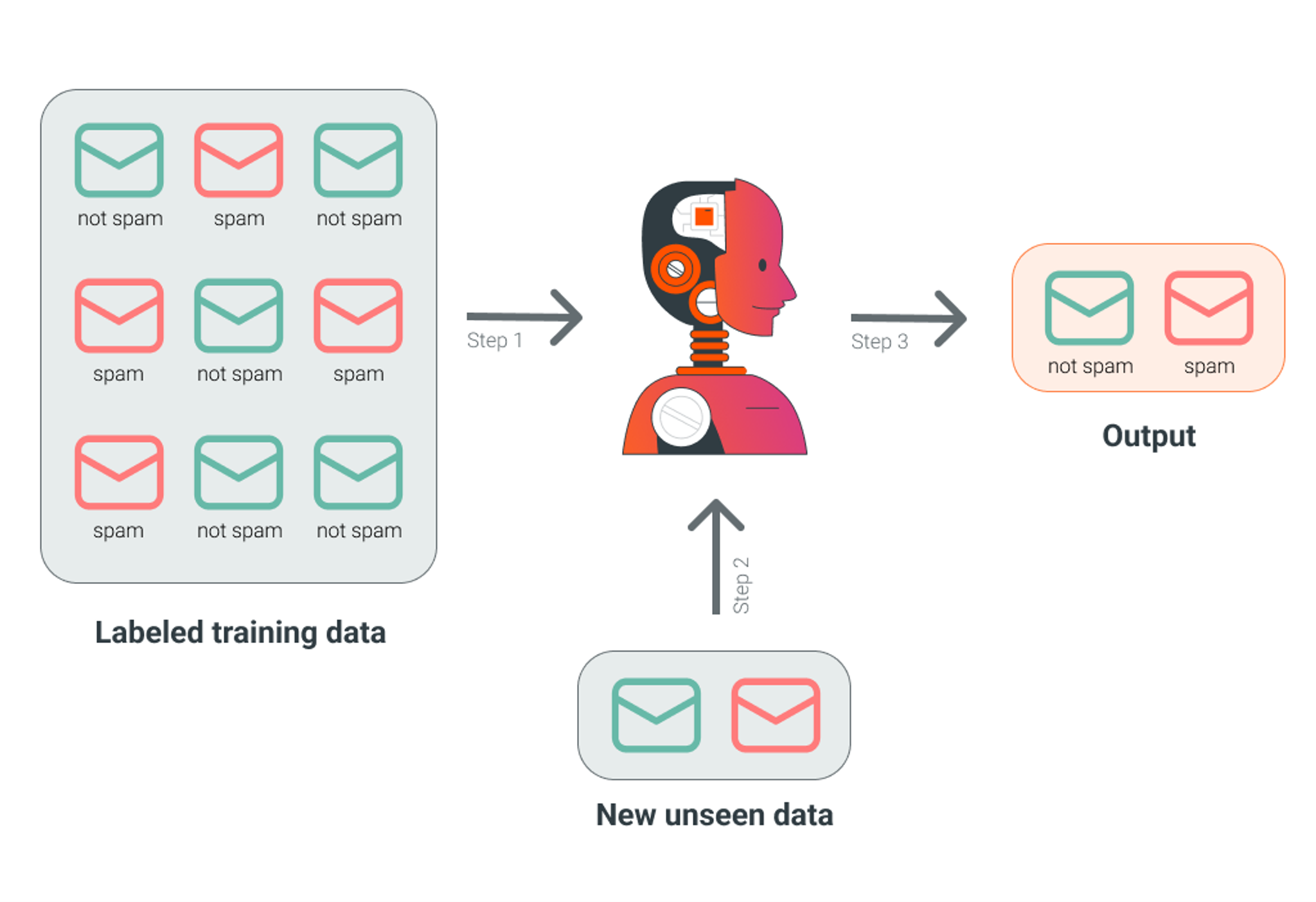

A Supervised Learning model uses labeled training data to map specific inputs to known outputs. This means that the model is trained on a dataset that includes both the input data and the corresponding correct output. The training process is iterative: the model adjusts itself to minimize its error on the labeled data, learning the relationship between input and output.

Supervised Learning models are useful for tasks like classification or object recognition. A classic use case of Supervised Learning is the identification of emails as spam or not spam. The model is first trained with a list of previously classified emails, and then uses the acquired knowledge to label new emails as spam or not spam.
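As a rough illustration of the spam example, here is a minimal sketch of a supervised classifier in plain Python. The six labeled emails, the `train` and `predict` functions, and the choice of a simple perceptron are all assumptions made for this illustration; real spam filters are far more sophisticated.

```python
from collections import defaultdict

# Hypothetical, hand-made training set: (email text, label), 1 = spam, 0 = not spam.
TRAINING_EMAILS = [
    ("win a free prize now", 1),
    ("free money claim your prize", 1),
    ("cheap loans win big now", 1),
    ("meeting agenda for monday", 0),
    ("please review the project report", 0),
    ("lunch on monday with the team", 0),
]


def train(examples, epochs=10):
    """Learn one weight per word by repeatedly correcting wrong predictions."""
    weights = defaultdict(float)
    bias = 0.0
    for _ in range(epochs):
        for text, label in examples:
            words = text.split()
            score = bias + sum(weights[w] for w in words)
            predicted = 1 if score > 0 else 0
            error = label - predicted  # 0 when the prediction matches the label
            if error != 0:
                # Nudge the weights of the words in this email toward the correct label.
                for w in words:
                    weights[w] += error
                bias += error
    return weights, bias


def predict(text, weights, bias):
    """Label an email using the learned weights (unknown words count as 0)."""
    score = bias + sum(weights.get(w, 0.0) for w in text.split())
    return 1 if score > 0 else 0


weights, bias = train(TRAINING_EMAILS)
print(predict("claim your free prize", weights, bias))      # 1 (spam)
print(predict("project meeting on monday", weights, bias))  # 0 (not spam)
```

Neither of the two test emails appears in the training set; the model labels them using the word weights it learned from the labeled examples.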

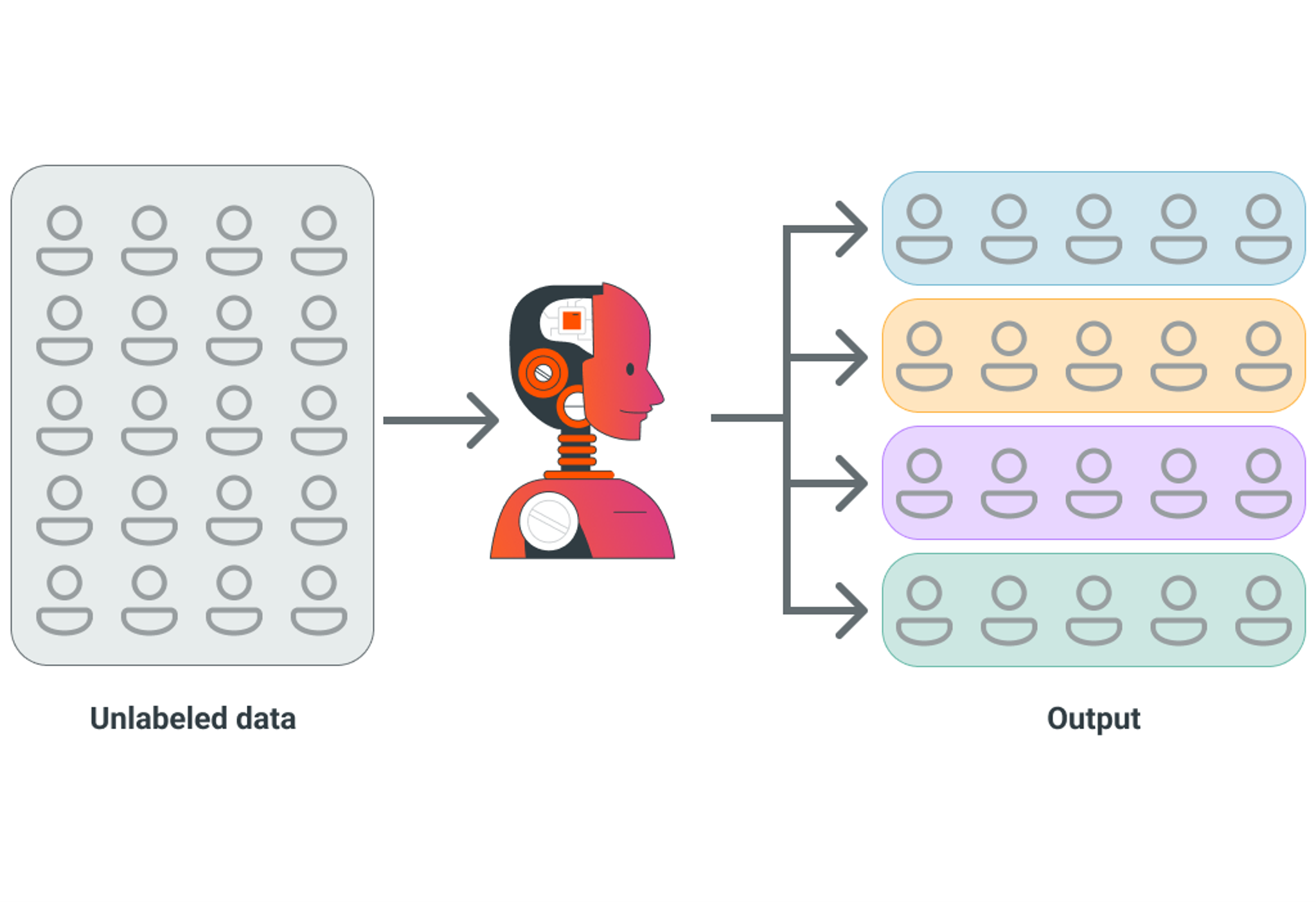

An Unsupervised Learning model uses unlabeled data as input. It then identifies patterns and tries to learn the structure of the data without any guidance or labels. This is the key difference from the Supervised Learning approach: in Unsupervised Learning the model has no previous knowledge of the output that a certain input should produce.

Unsupervised Learning is useful to discover patterns and relationships in data. It is often used for exploratory data analysis, trend detection and predictions. A common application of Unsupervised Learning is customer segmentation, where the algorithm analyzes patterns in the customers’ behavior and then identifies distinct customer groups, providing valuable data for targeted marketing, improving customer engagement and sales strategies.
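To make the customer segmentation idea more concrete, below is a minimal sketch that uses k-means clustering, one common unsupervised technique. The customer data, the two features, and the `k_means` function are hypothetical and chosen only for illustration. Note that no labels are provided: the algorithm only sees the raw numbers.

```python
import math

# Hypothetical customers: (purchases per month, average order value in dollars).
CUSTOMERS = [
    (1, 20.0), (2, 25.0), (1, 30.0),      # look like occasional, low-spend shoppers
    (9, 200.0), (10, 220.0), (8, 210.0),  # look like frequent, high-spend shoppers
]


def k_means(points, k, iterations=10):
    """Group points into k clusters by alternating assignment and averaging."""
    centroids = list(points[:k])  # simple deterministic start: the first k points
    assignments = []
    for _ in range(iterations):
        # Step 1: assign every point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Step 2: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return assignments, centroids


assignments, centroids = k_means(CUSTOMERS, k=2)
print(assignments)  # one cluster index per customer
print(centroids)    # the "average customer" of each segment
```

In a real project the features would usually be scaled first so that no single one dominates the distance, and the number of segments would be chosen by experimentation.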

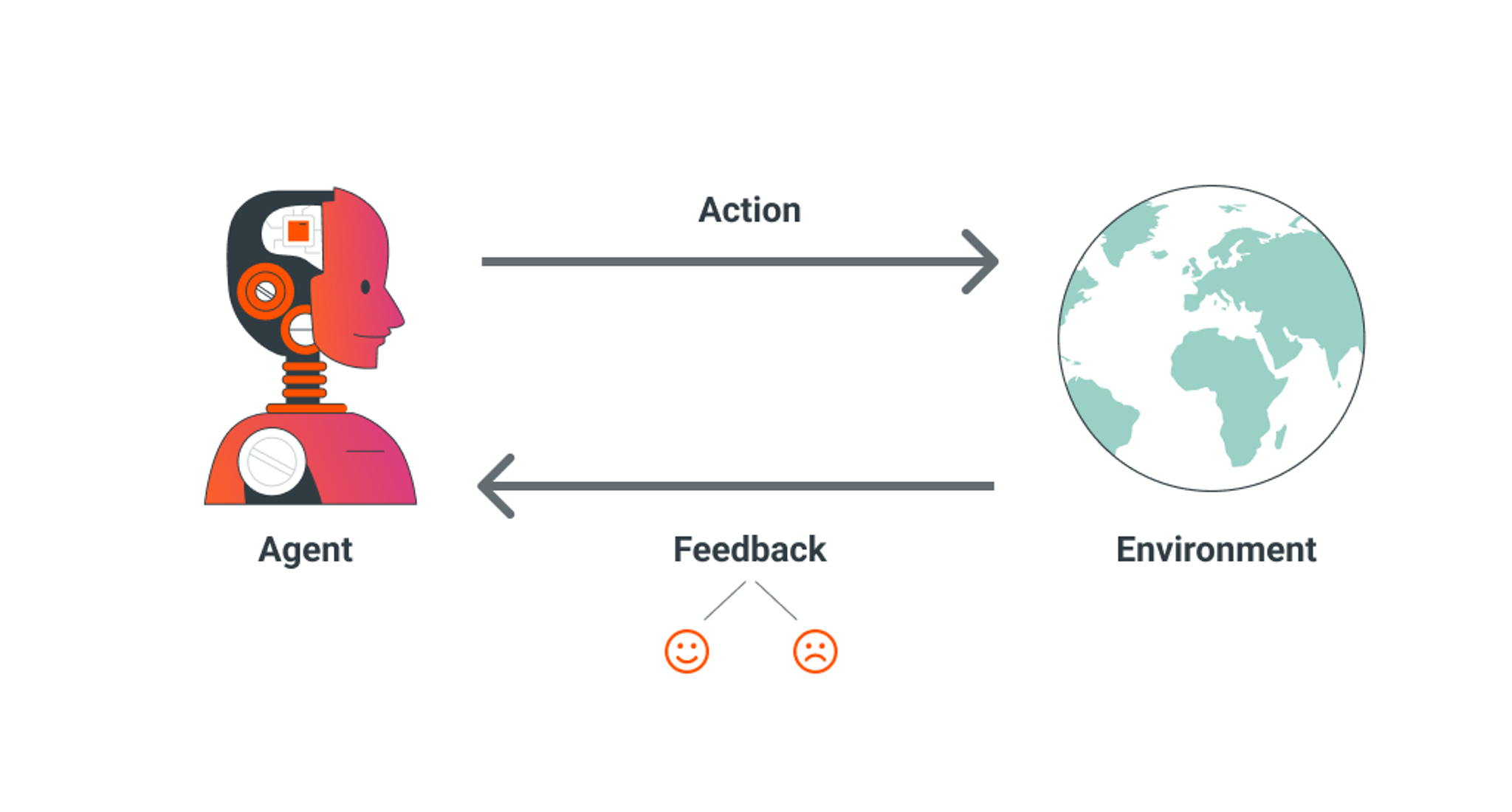

A Reinforcement Learning model learns by doing, through trial and error: an agent receives feedback to improve its performance on a defined task. Reinforcement Learning is used in scenarios where a certain result is expected, and the goal of the model is to find the optimal way to reach that result.

Reinforcement Learning relies on feedback, which can be positive or negative—rewards or penalties. Based on the feedback, the model focuses on optimizing actions to maximize rewards.

This approach is suitable for dynamic decision-making tasks. Reinforcement Learning is particularly important in robotics, where a robot needs to interact with an unpredictable environment. Self-driving cars are a great example: Reinforcement Learning is used to train autonomous vehicles to navigate roads safely. The cars learn optimal driving strategies through trial and error, receiving rewards for safe driving behaviors and penalties for mistakes, and improve their performance over time.
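A real driving simulator is far beyond the scope of this article, so the sketch below uses a toy stand-in: an agent in a five-cell corridor that must learn to reach the last cell. It uses tabular Q-learning, one classic Reinforcement Learning algorithm. The environment, the reward values, and every name in the code are assumptions made for illustration.

```python
import random

N_CELLS = 5          # corridor cells 0..4; the goal is the last cell
GOAL = N_CELLS - 1
ACTIONS = (-1, +1)   # move left, move right


def step(state, action):
    """Apply an action and return (next_state, reward)."""
    next_state = min(max(state + action, 0), GOAL)  # walls keep the agent inside
    reward = 1.0 if next_state == GOAL else -0.1    # reward at the goal, small penalty otherwise
    return next_state, reward


def train(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn a table of action values from random trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_CELLS)]  # q[state][action index]
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            a = rng.randrange(len(ACTIONS))  # explore by trying a random action
            next_state, reward = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward
            # reward + discounted value of the best next action.
            best_next = max(q[next_state])
            q[state][a] += alpha * (reward + gamma * best_next - q[state][a])
            state = next_state
    return q


q_table = train()
# The learned policy: in each non-goal cell, pick the action with the highest value.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:GOAL]]
print(policy)
```

After training, the policy should choose "move right" (`+1`) in every cell, even though the agent was never told where the goal is: it discovered this purely from the rewards and penalties it received.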

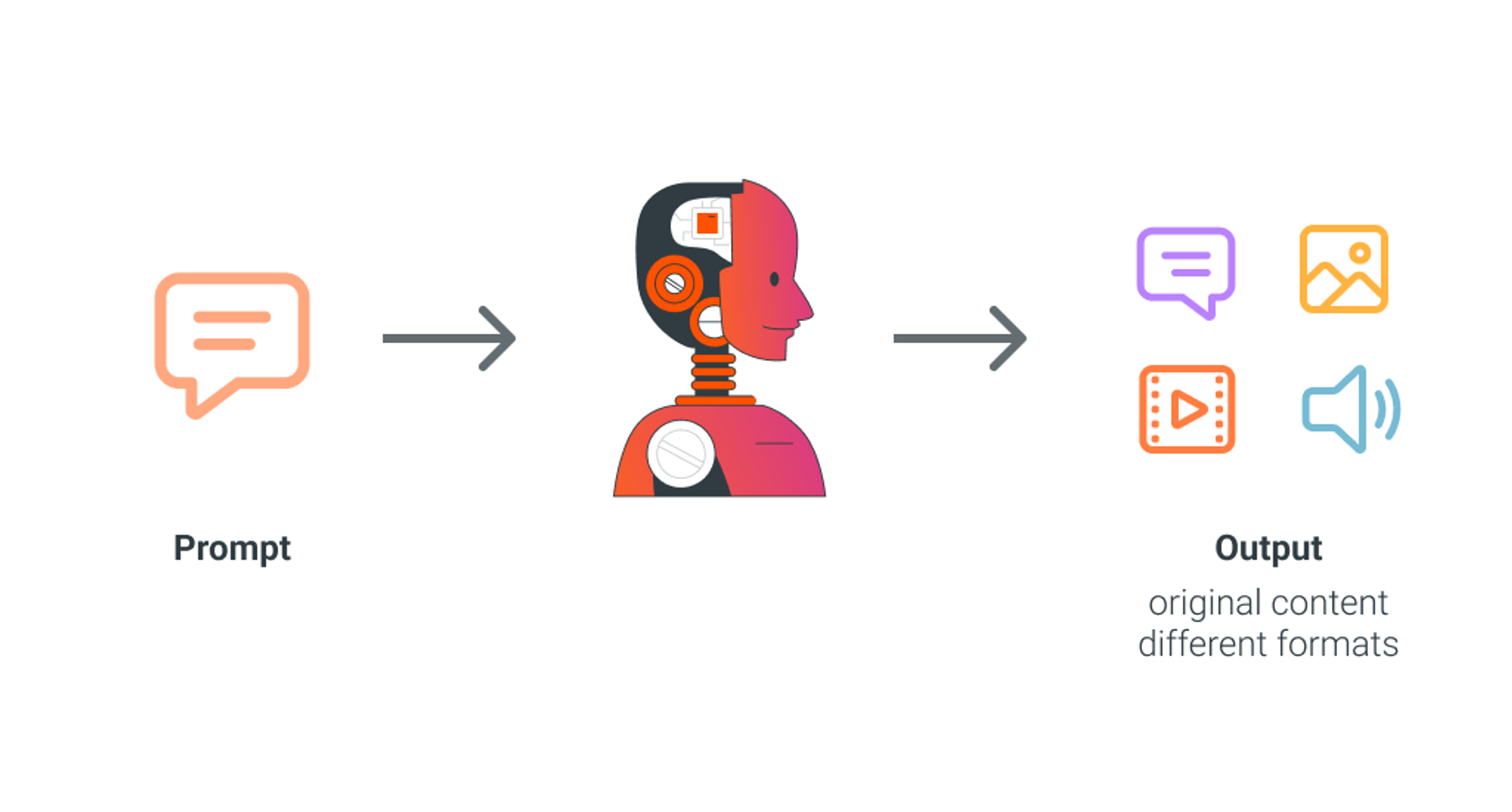

Generative AI is the branch of Artificial Intelligence that is capable of generating new content from its input data (the prompt). Let me be clear on this: the output a Generative AI model produces is new. It might have similar characteristics to the data the model was trained on, but it is the model’s creation. That is why we attribute creativity to Generative AI models: they mimic that human skill.

Generative AI models follow instructions and are capable of learning from context, using a technique called In-Context Learning (ICL). With ICL, a user provides task demonstrations within the prompt, so that the model can perform tasks it was not specifically trained for. ICL and other model grounding techniques make Generative AI models highly adaptable and capable of handling dynamic scenarios.
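In practice, In-Context Learning often comes down to how the prompt is written. The sketch below builds a prompt that contains two task demonstrations followed by a new input. The `build_few_shot_prompt` function, the "Input:/Output:" layout, and the example comments are all hypothetical; the sketch only assembles text and does not call any particular model or API.

```python
def build_few_shot_prompt(instruction, demonstrations, new_input):
    """Assemble an instruction, worked examples, and a new input into one prompt."""
    lines = [instruction, ""]
    for example_input, example_output in demonstrations:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # The final block is left open so the model completes the missing output.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical demonstrations of the task we want the model to pick up.
DEMONSTRATIONS = [
    ("The delivery arrived two days late.", "negative"),
    ("Support solved my issue in minutes.", "positive"),
]

prompt = build_few_shot_prompt(
    "Classify the sentiment of each customer comment as positive or negative.",
    DEMONSTRATIONS,
    "The new dashboard is much easier to use.",
)
print(prompt)
```

The resulting text would then be sent to a Generative AI model, which is expected to continue the pattern set by the demonstrations.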

Generative AI is most valuable in use cases where original output is needed, such as extracting key takeaways from a text, composing music, or creating images. For example, in CloudX’s recruiting pipeline, we realized we could improve the matching of technical profiles with job descriptions. We built a tool that performs a comprehensive skill matching assessment, providing a score and a justified explanation. This demonstrates how applied Generative AI can enhance daily work.

At the heart of Generative AI lies an innovative type of model known as the Large Language Model (LLM). LLMs take a prompt as input and then generate an output based on both their training data and any parameters the model is fed. If you are curious to learn more about how LLMs work, you are welcome to read this other article I wrote.

LLMs anticipate “what word should come next” in their output, so that the content they produce makes sense. At their core, LLMs are trained using Supervised Learning (more precisely, self-supervised learning, in which the next word of the training text acts as the label) and in some cases RLHF (Reinforcement Learning from Human Feedback). That brings me to a very important section of this article.
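Before moving on, the “what word should come next” idea can be illustrated with a deliberately tiny model. The sketch below counts which word follows which in a small made-up corpus and then predicts the most frequent follower. This is only an analogy: real LLMs use large neural networks rather than word counts, and the corpus and function names here are hypothetical. It does show, however, how plain text provides its own training labels, since each word is the "correct answer" for the word before it.

```python
from collections import Counter, defaultdict

# Hypothetical miniature training corpus.
CORPUS = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    follow_counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        follow_counts[current][following] += 1  # the next word acts as the label
    return follow_counts


def predict_next(model, word):
    """Return the word seen most often after `word`, or None if it was never seen."""
    candidates = model.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(model, start, length):
    """Produce text by repeatedly predicting the next word."""
    words = [start]
    for _ in range(length):
        following = predict_next(model, words[-1])
        if following is None:
            break
        words.append(following)
    return words


model = train_bigram(CORPUS)
print(predict_next(model, "the"))           # cat
print(" ".join(generate(model, "sat", 3)))  # sat on the cat
```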

Imagine the AI ecosystem as a toolbox. Of course you would not use a hammer to drive a screw! In the same way, you cannot solve every problem with Generative AI, just as you cannot solve every problem with a traditional AI approach. The techniques we discussed in this article complement each other. For some problems you will find that a single approach does the job, but in more complex scenarios you might have to combine two or more approaches to craft an effective solution.

From my experience helping a wide array of companies solve problems of different natures, there is no “one size fits all” approach. The AI landscape is huge, and most of the time several AI techniques are combined with classic software development to arrive at the right solution, tailored specifically to an organization’s problem.

Last but not least, if you would like to explore how you could add value to your company with AI, you are welcome to book a free consultation with us.
