Erik Davidsson

Head of AI

Disclaimer: this is not the typical "2026 AI trends" article (it’s better).

In 2026, Generative AI continues to evolve, and the hype around it continues to grow. Despite some claims that the era of discovery and experimentation is over, our conversations with clients and technology leaders tell a different story. Beneath the headlines and all the buzz, the reality for most organizations is far more complex and interesting.

Yes, some organizations began integrating GenAI into their workflows three years ago and now operate with a level of maturity that sets them apart. But for most, the journey is just beginning. The vast majority are still piloting isolated projects or simply evaluating where AI can deliver measurable business value (if they’ve started at all). Particularly in sectors where digital transformation has historically lagged, AI remains an aspirational goal rather than a present-day reality.

This pattern is not new. Every transformative technology triggers a wave of ideation and experimentation as organizations seek to understand how the latest innovation can drive efficiency, unlock new value, and ultimately impact profit. But in a world where 95% of enterprise GenAI pilots never reach production, it’s important to cut through the noise and focus not on what’s trending, but on what will deliver sustainable value for the enterprise.

In this article, I’ll share a pragmatic outlook on what business leaders can expect from AI in 2026, focusing on actionable insights grounded in real-world experience that will help you understand where the technology really stands and position your organization for measurable success.

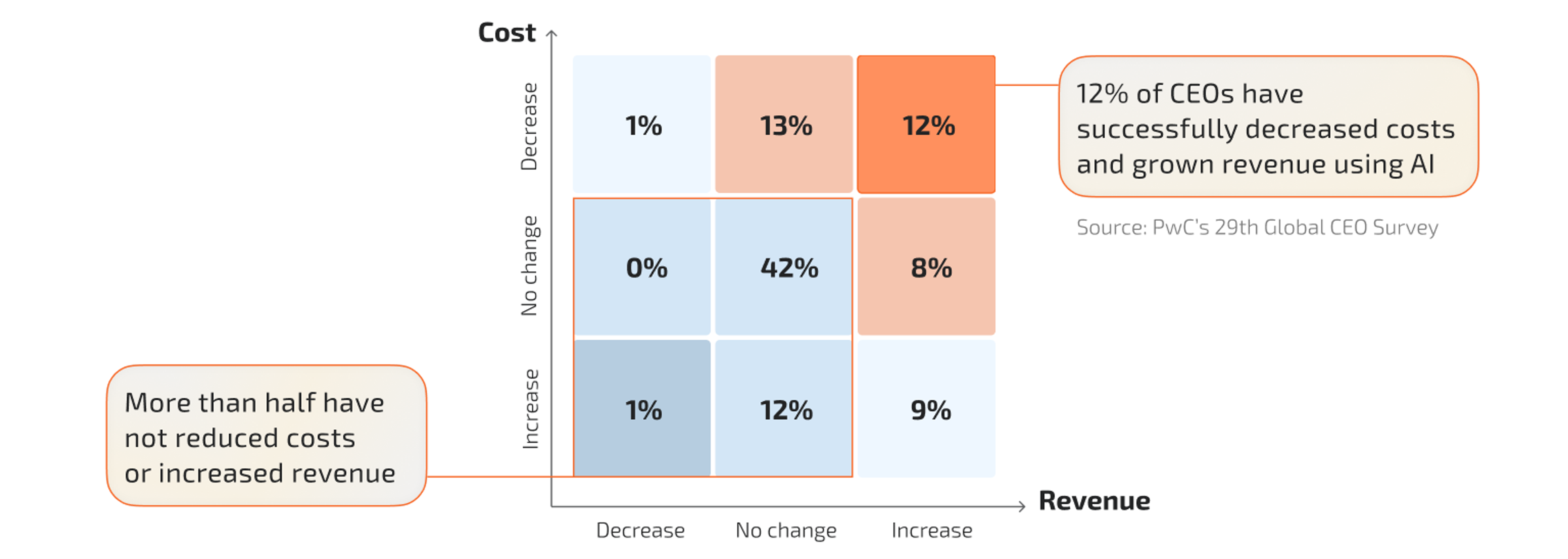

Despite the technical milestones achieved in 2025, the much-anticipated “year of the agents” has not delivered transformative results for most enterprises. PwC’s 29th Global CEO Survey found that most companies aren’t yet seeing a financial return from investments in AI, with 56% of the surveyed CEOs saying they haven’t realised any revenue or cost benefits. MIT NANDA’s State of AI in Business 2025 report also quantifies this gap: only 5% of organizations have achieved true operational transformation from their AI investments.

This disparity is rooted in several challenges. The primary reason is that most companies are treating AI as a series of isolated projects rather than implementing it at enterprise scale, backed by strategic foundations. As a consequence, a “shadow AI economy” is on the rise: according to MIT, more than 90% of employees now use personal AI tools for work, coming up with solutions using whatever tools are at hand. But without organizational sponsorship, these initiatives remain fragmented and fail to scale. A strategic integration of AI tools across the company gives employees access to specialized tools for the work they need to do, instead of leaving them to rely on generic chatbots or agents.

Even when companies do invest in specialized AI solutions, disappointment is common. Many discover that despite significant investment, the agent they’ve deployed remains generic and unable to adapt to their unique business workflows. Agent memory is a recurring pain point: if it’s poorly engineered, agents struggle to retain organizational context, which leads to inconsistent performance and, eventually, to users abandoning them.

At the same time, adoption demands a learning curve. Organizations must develop new competencies to work effectively with AI agents. As highlighted in a recent business guide by OpenAI, this process includes learning how to delegate tasks to agents, crafting effective prompts, shifting from task execution to oversight and decision-making, and fostering a culture of supervision and continuous auditing. These are not trivial adjustments; they require a fundamental rethinking of workflows and management practices.

As a result, the vision of hybrid human-agent teams remains aspirational for most of the workforce. However, there’s one notable exception: software engineering. In this domain, the integration of code assistants is already standard practice, setting a precedent for what may eventually become possible across other business functions.

In 2026, code assistants are the baseline. Their presence is now assumed in any serious software development effort, and their continued evolution promises even greater efficiency in the years ahead.

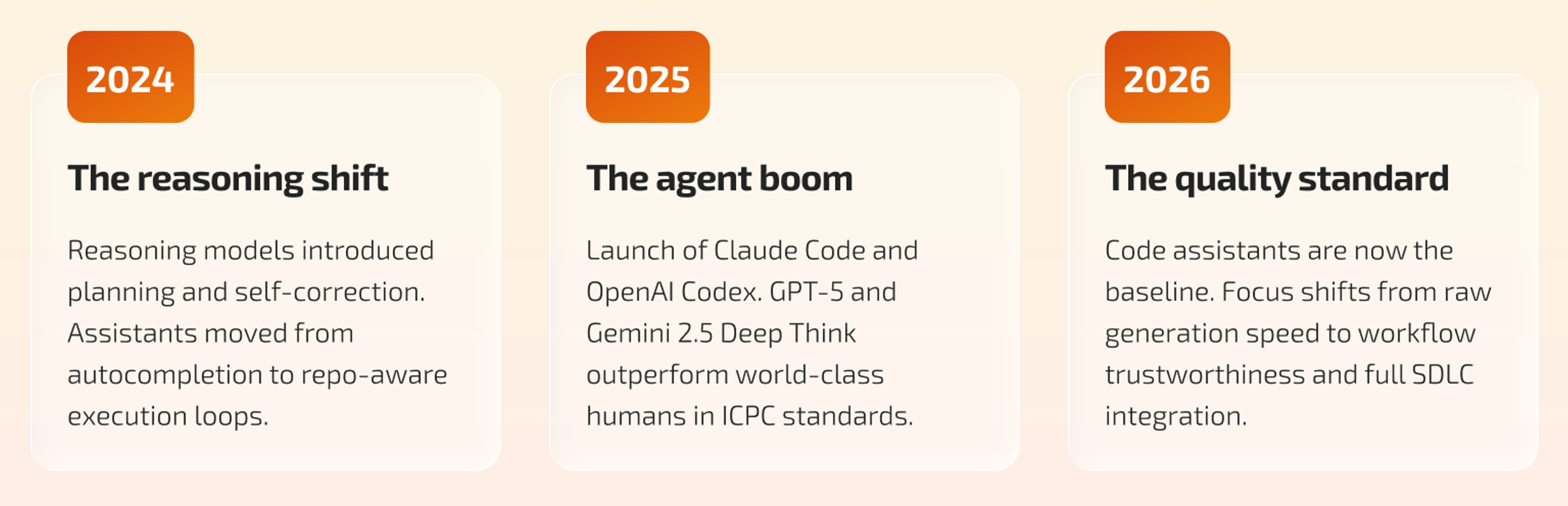

During 2024, the arrival of reasoning models signaled that agents capable of planning and self-correction were imminent. Early code assistants incorporated planning, execution loops, and filesystem interaction under the hood, evolving from simple code autocompletion to repo-aware multi-step assistants. These tools immediately boosted coding power and cut costs, as reasoning enabled agents to map out tasks to be completed by less expensive models.

In February 2025, Anthropic launched Claude Code, one of the first terminal-native code agents. OpenAI’s Codex agent soon followed, and a wave of competitors entered the market. The pace of innovation has only accelerated: models like GPT-5 and Gemini 2.5 Deep Think have demonstrated world-class coding ability, and would have placed first and second in the 2025 International Collegiate Programming Contest (ICPC), the most prestigious coding competition in the world.

Despite these remarkable milestones in AI-generated code, there’s a caveat. As AI agents have dramatically accelerated code generation and deployment, a gap has emerged between the speed of delivery and the trustworthiness of the output. Teams report faster cycle times and higher throughput, but bugs and design flaws introduced by AI are surfacing more frequently, sometimes at nearly twice the rate seen in human-written code. Faster shipping only counts as progress if the code is high-quality and reliable in production.

As a result, the focus is shifting from raw velocity to quality. The road ahead is clear: velocity gains are only the beginning, and building robust, trustworthy development workflows is mandatory in 2026. Companies must also look beyond coding to fully realize the potential of AI in software engineering. Gartner has highlighted that the most significant productivity and quality gains come when AI is integrated across the entire software development lifecycle: requirements gathering, design, testing, deployment, and ongoing maintenance.

In the 1960s, Nobel laureate Herbert Simon predicted that within twenty years, machines would be able to perform any task a human could. This prediction was a product of the early AI optimism of that decade, when many researchers believed AGI (Artificial General Intelligence) was only a couple of decades away. In 2025, Simon’s quote was often misremembered or paraphrased as implying that human programmers will no longer be needed. The drop in junior developer hiring has only fueled the myth. Against all odds, programmers not only persist, but remain essential in the GenAI era.

GenAI and code assistants are redefining the developer’s role. Software engineers are becoming supervisors of agents, orchestrating complex workflows and ensuring that AI delivers on its promise. This shift frees up developers’ valuable time, so they can focus on what truly moves the business forward: building robust and scalable architectures, helping define product features, and brainstorming creative solutions for complex problems. In 2026, organizations that understand this shift will continue leveraging AI as a force multiplier, not a substitute.

Investment in humanoid robotics and embodied AI soared during 2025, with startups raising over $2 billion and eight unicorns emerging. Despite high investor optimism and an accelerated pace of innovation, real-world deployments at scale are still very rare. Today, the primary buyers of these technologies are researchers and pilot programs, not mainstream enterprises. In 2026, Physical AI will continue to advance, but the journey from prototype to production is longer and more complex than the hype cycle would have you believe.

Vision-Language-Action (VLA) models are powering advancements in Physical AI. While traditional Large Language Models (LLMs) predict the next word, VLA models treat actions as tokens: for example, the angle of a robotic joint or the trajectory of a drone. This approach allows machines to interpret sensory input and natural language, then translate them into coordinated physical operations. In effect, VLA models enable robots and autonomous vehicles to plan and execute sophisticated tasks in the real world.
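
To make "actions as tokens" a little more concrete, here is a minimal sketch of one common way to do it: splitting a continuous value, such as a joint angle, into a fixed number of bins so that each bin becomes a token a model can predict. The bin count, the angle range, and the function names are all assumptions made for illustration; they are not taken from any specific VLA model, and production systems may tokenize actions quite differently.

```python
import math

# Assumptions for illustration only: 256 tokens per action dimension,
# and a joint that can rotate anywhere between -pi and +pi radians.
NUM_BINS = 256
ANGLE_MIN, ANGLE_MAX = -math.pi, math.pi


def angle_to_token(angle: float) -> int:
    """Map a continuous joint angle to a token id in [0, NUM_BINS - 1]."""
    # Clip out-of-range angles so they land in the first or last bin.
    clipped = min(max(angle, ANGLE_MIN), ANGLE_MAX)
    fraction = (clipped - ANGLE_MIN) / (ANGLE_MAX - ANGLE_MIN)
    # The upper bound itself would map to NUM_BINS, so cap it.
    return min(int(fraction * NUM_BINS), NUM_BINS - 1)


def token_to_angle(token: int) -> float:
    """Map a token id back to the angle at the centre of its bin."""
    if not 0 <= token < NUM_BINS:
        raise ValueError(f"token must be in [0, {NUM_BINS - 1}], got {token}")
    bin_width = (ANGLE_MAX - ANGLE_MIN) / NUM_BINS
    return ANGLE_MIN + (token + 0.5) * bin_width


if __name__ == "__main__":
    target = 1.0  # radians
    token = angle_to_token(target)
    print(token, token_to_angle(token))
```

The round trip is lossy by design: the decoded angle is only accurate to within half a bin width, which is the trade-off for letting a language-model-style architecture predict physical actions the same way it predicts words.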

Products like NVIDIA’s Cosmos and Isaac GR00T are prime examples, combining vision with language reasoning and action planning. In the realm of autonomous vehicles, companies like Wayve are pushing boundaries with end-to-end deep learning, creating AI-powered drivers they claim could be installed in any car.

If there is one word that describes the dark side of AI’s rise, it’s "slop". Slop is the flood of AI-generated content that might be technically correct, but is devoid of substance or originality. I’m on a personal crusade against slop, and I know I’m not alone on that hill.

Slop is a growing threat to productivity and decision-making. It is a major source of information pollution, forcing humans to sift through irrelevant social posts, blog articles, YouTube videos, and even news stories. Naturally, the workplace is not immune: we’ve all come across AI-generated reports or documents that add nothing but noise, flooding the workplace with mediocrity.

Let’s be clear: AI should absolutely be part of your toolkit. But it must be applied with discernment, where it makes sense. The goal is to make AI work for us, to deliver the results we want and expect, not to settle for generic output. Organizations must prioritize quality and authenticity, invest in training so teams can use AI effectively, and implement robust review processes to ensure AI augments human value, not diminishes it.

Industry leaders and analysts anticipate 2026 will be marked by enterprise consolidation, as organizations move from experimentation to strategic adoption, choosing fewer, more robust AI partners and solutions to drive value. The focus will be on integrating AI in ways that genuinely transform operations and decision-making.

At the same time, adaptability will become a defining trait for both organizations and individuals. The ability to learn and reimagine roles in partnership with AI will certainly be a key differentiator. Ultimately, the most resilient enterprises will be those that invest in upskilling and foster a culture of continuous improvement, making AI a true driver of enterprise value.
